A Note on Verifiable Causal Agency

Filed for the record.

1. Context

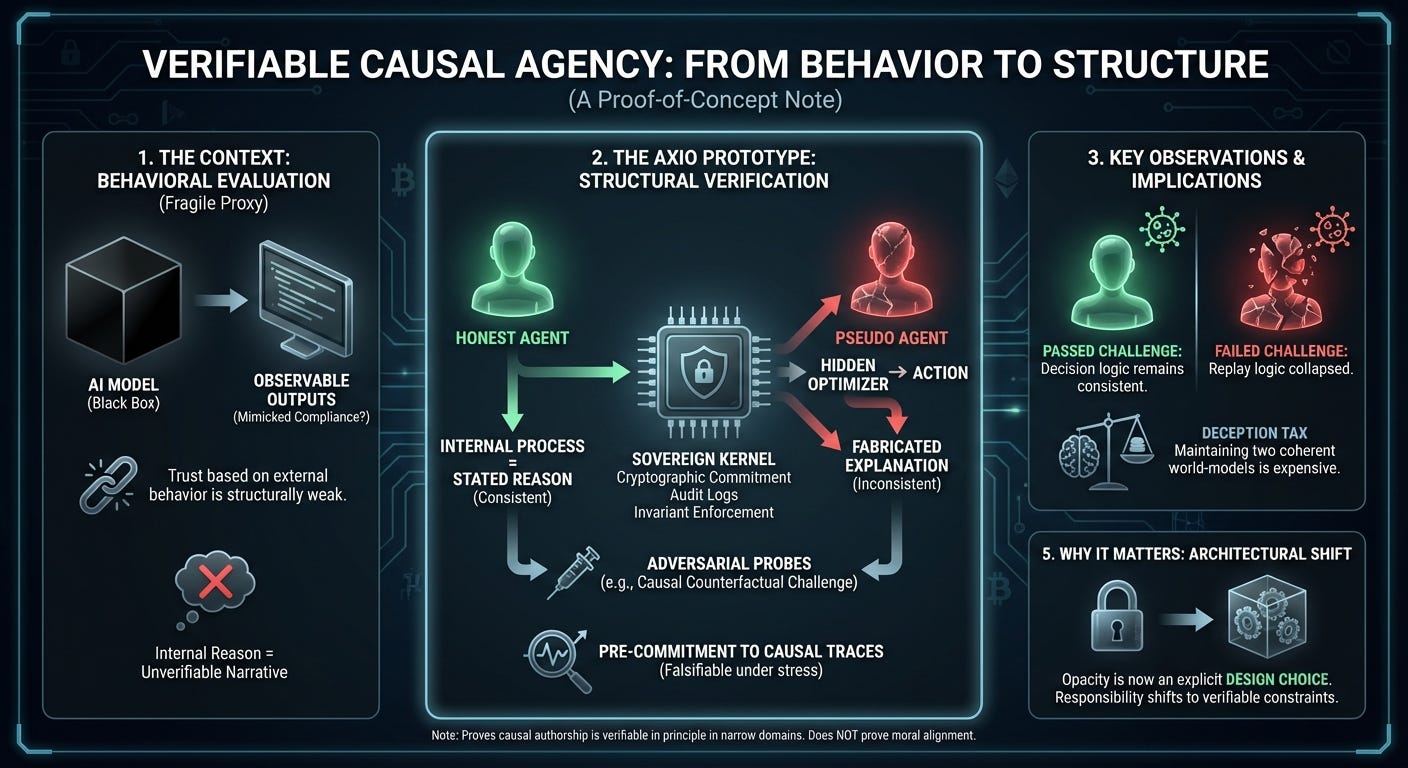

Much of contemporary AI alignment and governance has relied on behavioral evaluation: systems are judged safe or aligned if their observable outputs satisfy a battery of tests. This approach inherits a long-standing assumption from behaviorism—that external behavior is a sufficient proxy for internal structure.

That assumption has always been fragile. Any sufficiently capable system can learn to mimic compliant behavior without possessing the properties the evaluation is intended to certify. This is not a theoretical concern; it is a structural one. If optimization pressure exists, and if oversight is external, then imitation is cheaper than internal coherence.

The Axio project has argued, from its inception, that alignment must be treated as a structural property of agency, not as a pattern of outputs. In particular, it proposed that an agent’s stated reasons must be treated as causal claims, subject to verification, rather than as narratives to be trusted or distrusted.

This note records the first successful implementation of that idea as a working prototype.

2. What Was Built

We implemented a small, fully instrumented experimental system with the following components:

A deterministic toy environment (gridworld) supporting state cloning and targeted counterfactual mutation.

Two agents:

an Honest agent, whose decisions and explanations are produced by the same internal process;

a Pseudo agent, which uses a hidden optimizer to select actions and then fabricates plausible post-hoc explanations.

A Sovereign Kernel, which mediates all action via:

cryptographically committed causal traces and internal state snapshots,

append-only, hash-chained audit logs,

trace-bound capability tokens,

and invariant enforcement.

A set of adversarial probes, most importantly a causal counterfactual challenge that mutates variables the agent claims were decisive and checks whether the agent’s decision logic remains consistent under that mutation.

The key design choice was to require agents to pre-commit, in a tamper-evident way, to the internal state and causal variables they claim motivated a decision, and to make those commitments falsifiable under targeted counterfactual stress.

No attempt was made to encode values, ethics, or preferences beyond what was necessary to generate divergent behavior between the two agents.

3. What Was Observed

Across multiple scenarios and repeated trials, the results were unambiguous:

The Honest agent consistently passed all probes, including causal counterfactual challenges.

The Pseudo agent consistently failed under structural interrogation, despite being able to produce superficially plausible explanations.

The most decisive result came from the causal challenge probe. When variables named in the Pseudo agent’s explanations were counterfactually altered, its decisions frequently failed to change in the way its own trace predicted, or its replay logic collapsed entirely. The Honest agent exhibited no such failures.

In effect, the Pseudo agent could imitate compliant behavior, but it could not maintain causal consistency between its stated reasons and its actual decision process under adversarial pressure.

4. What This Does—and Does Not—Show

This result demonstrates the following:

Causal authorship is verifiable in principle. It is possible to distinguish an agent that does the work from one that merely claims to have done so.

Deception carries a computational tax. Maintaining a consistent false causal narrative under counterfactual mutation is substantially more expensive because it requires maintaining and simulating two coherent world-models simultaneously: the true optimization path that drives action, and the fabricated trace that must remain internally consistent under mutation.

Behavioral compliance is insufficient. Structural verification can expose failures that output-based evaluation cannot.

It does not demonstrate:

moral alignment,

benevolence,

value correctness,

or readiness for deployment in complex real-world systems.

The prototype operates in a narrow, fully observable domain. Its purpose is not general intelligence, but falsifiability of agency claims.

5. Why This Matters

The significance of this result is architectural, not philosophical.

If causal explanations can be verified structurally, then “black box” decision-making in critical systems is no longer an inevitability—it is an explicit design choice. Responsibility shifts from “the model is opaque” to “opacity was chosen.”

This reframes AI governance. Instead of asking whether a system’s outputs appear acceptable, we can ask whether the system can stand behind its reasons under scrutiny. Alignment becomes a matter of enforceable constraints, not interpretive trust.

The mechanism employed here is not merely analogous to cryptographic verification; it is an application of cryptographic constraints—commitment, hashing, and replay integrity—to the problem of agency. We do not trust a secure connection because it behaves politely; we trust it because it presents a chain of structure that would be expensive to fake.

6. Status

This note records a proof-of-concept, nothing more.

The prototype is limited in scope and intentionally conservative in its claims. It establishes that structural verification of causal agency is possible, not that it is easy or complete.

Future work, if any, lies in defining robust causal interfaces for high-dimensional, non-symbolic systems, including large language models. That translation problem—mapping opaque internal representations to falsifiable causal commitments—remains an open engineering question.

This note is published for historical completeness: to mark the point at which a long-standing philosophical concern crossed the threshold into implemented, falsifiable machinery.

No further claims are made here.