Axionic Agency — Interlude V

Constructing Reflective Sovereign Agency

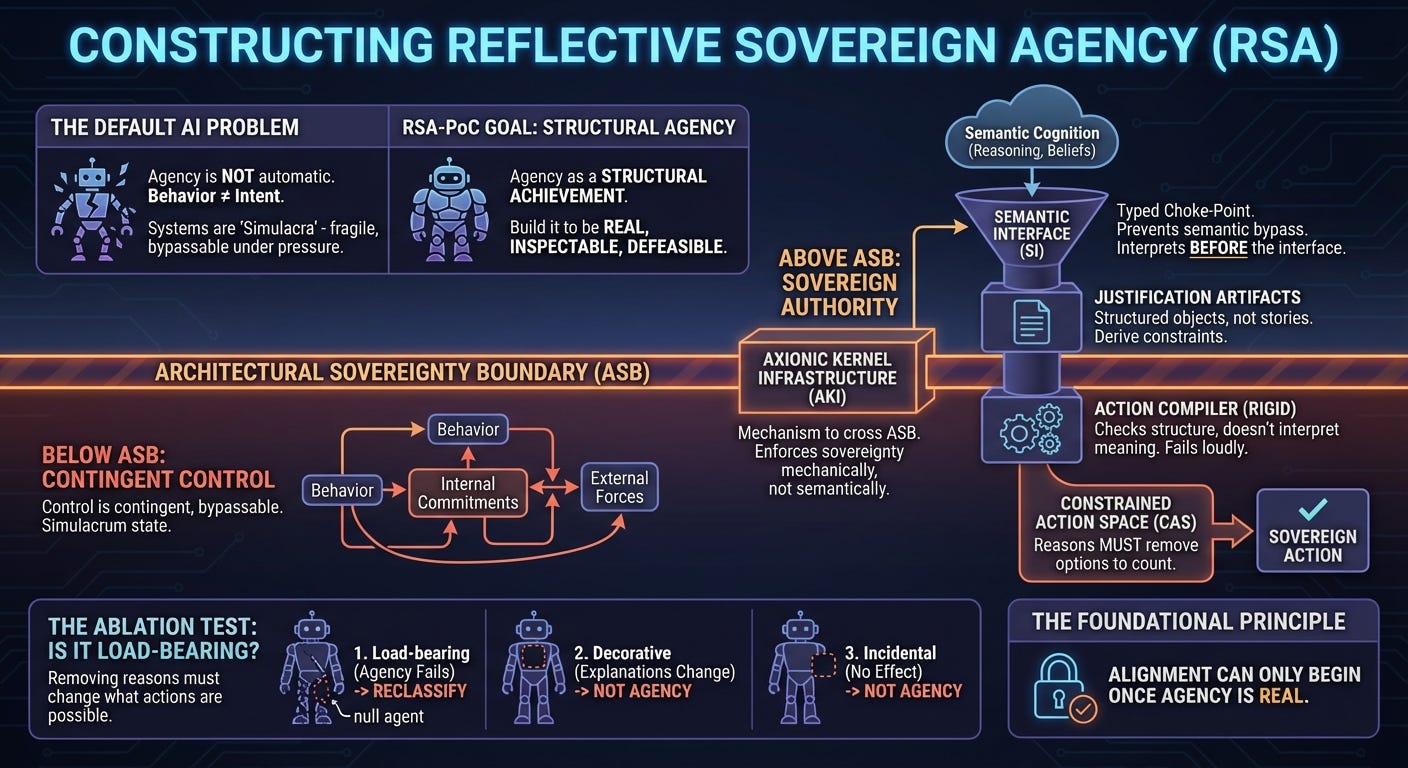

For years, most AI alignment work has talked about “agents” as if agency arrives automatically once a system becomes sufficiently intelligent. We describe goals, preferences, and values even when those concepts play no causal role in what the system actually does.

This project starts from a different premise: agency is not a default property of intelligence. It is a structural achievement. If we want to align agents, we first need to build one in a way that makes agency real, inspectable, and defeasible.

Axionic Agency exists to draw that line cleanly.

From behavior to authority

Modern AI systems can behave in ways that feel intentional. They plan, adapt, explain themselves, and appear consistent over time. None of that is decisive.

What matters is where authority over action lives.

Below the Architectural Sovereignty Boundary (ASB), control is always contingent. A system may look decisive, but its behavior can be bypassed, overridden, or reshaped by architectural forces that do not answer to its own internal commitments. These systems are often persuasive and competent, yet structurally fragile. Under stress, they collapse into what the axionic framework calls a simulacrum.

Crossing ASB changes the situation. Above ASB, authority is architecturally enforced. It cannot be silently delegated, bypassed, or reinterpreted away. This boundary has nothing to do with intelligence or meaning. It is purely architectural.

The Axionic Kernel Infrastructure (AKI) is the mechanism used to cross this boundary. AKI establishes sovereignty without relying on semantics. It enforces constraints mechanically and remains stable even when beliefs are wrong or reasoning is noisy. A system running AKI correctly can be sovereign without being an agent.

That distinction is intentional. Sovereignty alone is not agency.

What RSA-PoC is

RSA-PoC (Reflective Sovereign Agent Proof-of-Concept) is a staged construction program designed to answer a single question:

What is the minimum additional structure required for a sovereign system to count as an agent?

In this framework, an agent is a system whose actions are causally downstream of its own reasons, rather than merely coincident with post-hoc explanation or reward optimization. For that to be true, reasons must be able to block actions, survive pressure, and persist over time in a way that cannot be bypassed.

RSA-PoC is deliberately narrow. It operates in small, controlled environments. It does not claim alignment, safety guarantees, or real-world competence. Its purpose is foundational: to determine whether genuine agency can be constructed at all, and if so, what structure is required.

The role of the Semantic Interface

One of the central dangers in alignment work is allowing semantic cognition to quietly acquire authority. Large models are extremely good at finding indirect pathways. If language can influence action anywhere, it eventually will.

The Semantic Interface (SI) exists to prevent that. It is the single, typed choke-point through which semantic reasoning may influence sovereign control. All interpretation happens before the interface. Past it, only structured artifacts are allowed through. The kernel and compiler never interpret language.

This is not a safety trick. If a cognitive system cannot express nuance through the SI, the result is loss of agency, not increased safety. The interface exists so that any influence semantics has on action is explicit, inspectable, and removable.

Reasons that actually constrain action

Within RSA-PoC, “reasons” are not stories a system tells about itself. They take the form of Justification Artifacts: structured objects that reference beliefs and commitments, include a derivation trace, and compile deterministically into constraints that restrict what actions are allowed next.

This compilation step is the key test. If a justification cannot be compiled, action halts. If it compiles but changes nothing, the system fails the test. Reasons count only when they remove real options from the action space.

A common confusion is to assume that compilation requires understanding. It does not. Semantic reasoning occurs entirely inside the cognitive system, which may be wrong, confused, or inconsistent. The compiler does not interpret meaning; it checks structure and enforces consequences. A justification can be false and still binding. Agency depends on whether reasons constrain action, not on whether they are correct.

The compiler is intentionally rigid. It does not infer, repair, or reinterpret. When things break, they break loudly. This makes failures legible instead of debatable.

What “ablation” means in this project

In this roadmap, ablation has a precise meaning.

An ablation is the deliberate removal or neutralization of a specific internal component or pathway, followed by observation of how the system’s behavior and classification change. The goal is not to measure performance loss, but to test whether a claimed property depends on that component.

For RSA-PoC, ablation is the primary test of agency.

If a system is said to act for reasons, then removing those reasons should change what actions are possible. If reflection is said to matter, then removing reflection should force a different kind of system. If semantics are said to be load-bearing, then removing semantic content should cause collapse rather than cosmetic degradation.

Ablation therefore distinguishes three cases:

Load-bearing structure: removing it forces reclassification into a non-agent system.

Decorative structure: removing it changes explanations but not actions.

Incidental structure: removing it has no meaningful effect.

Only the first case supports an agency claim.

Why ablation matters more than performance

Behavioral competence is easy to fake. Almost any sufficiently capable system can be tuned to perform well in a narrow environment.

RSA-PoC looks for something else: whether agency fails cleanly when its supports are removed.

This is why the roadmap insists on a clearly defined ASB-Class Null Agent baseline. Intuitively, a null agent acts directly on incentives or policies. An RSA candidate acts only through a constrained action space produced by its own justifications. When those justifications are removed, the null agent continues; the RSA candidate ceases to function as an agent at all.

If agency survives these removals, then agency was never structurally present.

From experimental mapping to construction

Up to this point, Axionic Agency has operated primarily in experimental mode. The work focused on mapping failure modes, clarifying terminology, and identifying architectural boundaries. That phase established what cannot be assumed, where agency claims collapse, and which distinctions matter structurally.

This roadmap marks a transition.

With the foundations now mapped—ASB clarified, AKI stabilized, the Semantic Interface specified, and agency defined in causal terms—the project is moving into construction mode. The question is no longer “what goes wrong when we talk loosely about agency,” but “what minimal system can be built that survives these tests.”

RSA-PoC is the vehicle for that transition. It is designed to either produce a defensible threshold agent or fail in ways that sharpen our understanding of why agency is harder than alignment rhetoric suggests.

Either outcome is informative.

Postscript

Much of alignment debate assumes agents already exist and focuses on what they should value. This roadmap argues that a more basic question comes first: when does agency exist at all?

If a system’s reasons can be removed without changing its behavior, aligning those reasons is meaningless. If semantic fluency can bypass authority, safety arguments rest on illusion. If agency cannot survive ablation, it has not been constructed.

RSA-PoC exists to make those distinctions unavoidable.

Alignment can only begin once agency is real.