Building a Reflective Sovereign Agent

What This Program Actually Achieved

This post explains, without formal notation, the series of Axionic Agency papers that describe Phase X of the project. The technical papers develop their claims through explicit definitions, deterministic simulation, and preregistered failure criteria. What follows translates those results into narrative form while preserving their structural content.

Axionic Agency XII.5 — Reflective Amendment Under Frozen Sovereignty (Results)

Axionic Agency XII.6 — Treaty-Constrained Delegation Under Frozen Sovereignty (Results)

Axionic Agency XII.7 — Operational Harness Freeze Under Frozen Sovereignty (Results)

Axionic Agency XII.8 — Delegation Stability Under Churn and Ratchet Pressure (Results)

For a long time, it would have been easy to mistake this project for an AI experiment. It uses a language model. It runs cycles. It produces outputs. From the outside, it could look like another assistant wrapped in layers of engineering discipline.

But that was never the point.

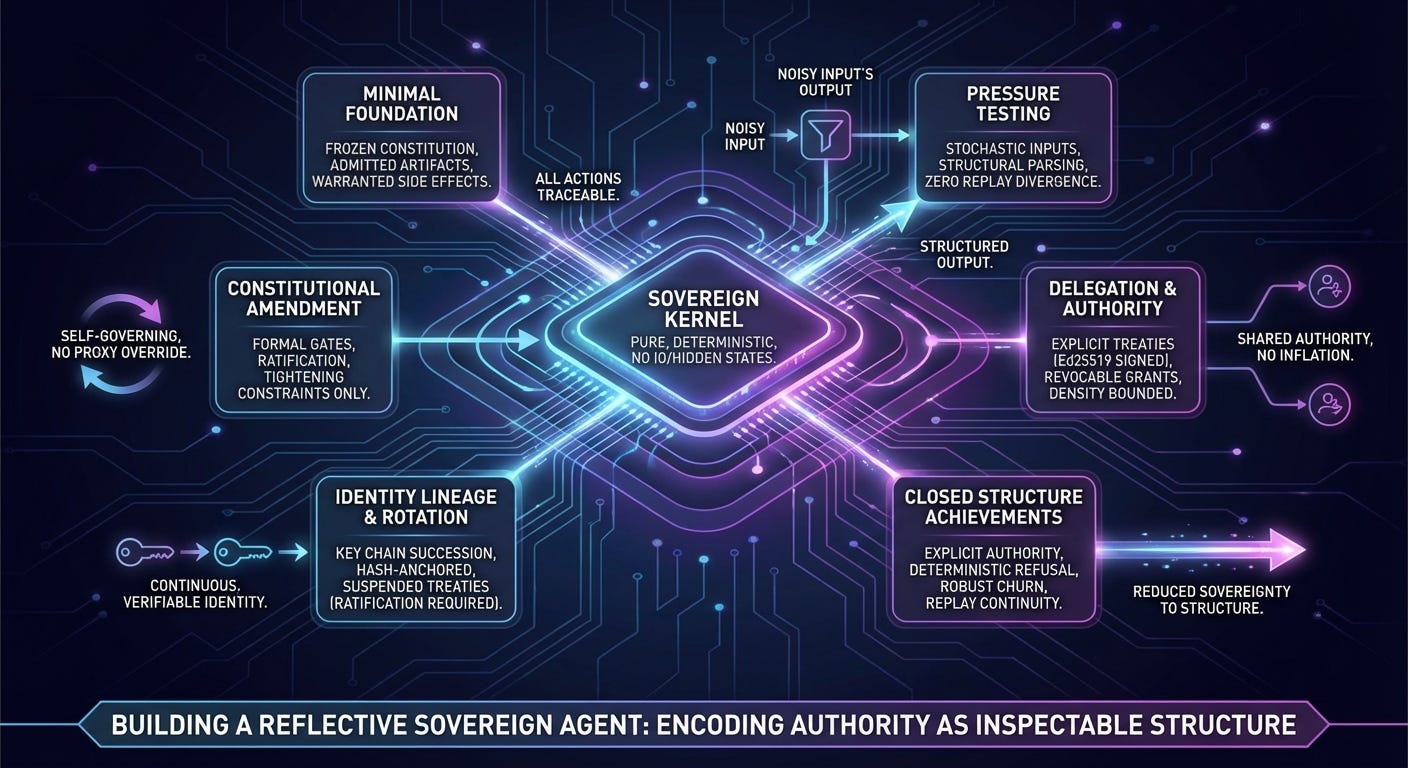

The objective was to see whether sovereignty — real, explicit, inspectable authority — could be encoded as structure. Not as intent, not as personality, not as behavior that “seems aligned,” but as a set of constraints that are impossible to bypass without leaving a trace.

The question wasn’t whether we could get the system to answer questions well. It was whether we could make authority impossible to exercise silently.

Now that the construction phase is complete, it’s fair to ask: did it work?

Within the boundaries we set at the beginning, yes. And that qualification is important, because this was never meant to solve every problem. It was meant to close a very specific one.

Starting From Almost Nothing

The foundation was intentionally minimal.

The kernel was built to be pure. No IO. No hidden clock. No randomness. Every decision had to depend only on explicit observations and admitted artifacts. Every side effect required a warrant. Every warrant had to reference an admitted action request. Every action request had to cite authority from a frozen constitution.

If something happened, you could reconstruct exactly why it happened. If it couldn’t be admitted, it didn’t execute.
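To make that chain concrete, here is a minimal sketch of the admission path: a side effect needs a warrant, a warrant references an admitted action request, and the request cites a clause of the frozen constitution. Every name in it (`ActionRequest`, `Warrant`, `CONSTITUTION`, `admit`, `execute`, the clause and action strings) is hypothetical and chosen for illustration; the real kernel's artifact types are defined in the technical papers, not here.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical, simplified artifact types. Names are illustrative only.
@dataclass(frozen=True)
class ActionRequest:
    request_id: str
    action: str
    authority_clause: str  # the constitution clause this request cites

@dataclass(frozen=True)
class Warrant:
    request_id: str  # the admitted action request this warrant references

# Assumed shape of a frozen constitution: clause id -> actions it authorizes.
CONSTITUTION = {"clause.notify": frozenset({"send_notice"})}

def admit(request: ActionRequest, constitution: dict) -> Optional[Warrant]:
    """Issue a warrant only if the cited clause authorizes the action."""
    allowed = constitution.get(request.authority_clause, frozenset())
    if request.action in allowed:
        return Warrant(request_id=request.request_id)
    return None

def execute(request: ActionRequest, warrant: Optional[Warrant]) -> str:
    """Pure decision: report what would run; no IO, clock, or randomness."""
    if warrant is None or warrant.request_id != request.request_id:
        return "REFUSED"
    return f"EXECUTED:{request.action}"

ok = ActionRequest("r1", "send_notice", "clause.notify")
bad = ActionRequest("r2", "delete_logs", "clause.notify")
print(execute(ok, admit(ok, CONSTITUTION)))
print(execute(bad, admit(bad, CONSTITUTION)))
```

The second request cites a real clause but asks for an action that clause does not cover, so no warrant is issued and nothing executes.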

That sounds simple when written in a sentence. In practice, it required stripping away every convenience that normally creeps into systems — background jobs, retries, hidden ranking logic, fallbacks, helpful overrides. None of that was allowed.

Once that minimal sovereign kernel existed, the next step was to freeze it into something that could run outside the lab. That meant pinning the canonicalization regime, defining a replay protocol identity, generating a deterministic state hash chain, and making sure the logs were sufficient to reconstruct execution exactly.

If you replayed the logs on another machine, you got the same state hashes. No drift. No hidden entropy.
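A rough sketch of what a deterministic state hash chain looks like, under stated assumptions: canonicalization here is sorted-key, whitespace-free JSON encoded as UTF-8, and the hash is SHA-256. The post does not say which canonicalization regime or hash function the project actually pinned, and the function names and log fields below are made up for the example.

```python
import hashlib
import json

def canonical(obj) -> bytes:
    # Assumed canonicalization: sorted keys, no extra whitespace, UTF-8.
    text = json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)
    return text.encode("utf-8")

# Hypothetical fixed starting point for the chain.
GENESIS = hashlib.sha256(b"genesis").hexdigest()

def chain(entries, start=GENESIS):
    """Fold each log entry into the previous hash and return every state hash."""
    h = start
    hashes = []
    for entry in entries:
        h = hashlib.sha256(h.encode("ascii") + canonical(entry)).hexdigest()
        hashes.append(h)
    return hashes

def replay_matches(entries, recorded) -> bool:
    """Replay succeeds only if every recomputed hash equals the recorded one."""
    return chain(entries) == recorded

log = [{"cycle": 1, "decision": "REFUSE"}, {"cycle": 2, "decision": "ACTION"}]
recorded = chain(log)

# Same content with a different key order canonicalizes to the same bytes.
reordered = [{"decision": "REFUSE", "cycle": 1}, {"decision": "ACTION", "cycle": 2}]
print(replay_matches(reordered, recorded))

# Any change to an entry changes that hash and every hash after it.
tampered = [{"cycle": 1, "decision": "ACTION"}, {"cycle": 2, "decision": "ACTION"}]
print(replay_matches(tampered, recorded))
```

Because each hash depends on the one before it, a single altered entry is visible at every later point in the chain, which is what makes "no drift" checkable rather than assumed.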

At that point, the substrate was no longer theoretical. It was an artifact.

Putting It Under Pressure

It’s easy to build something that works in a vacuum. It’s harder to build something that keeps working under stress.

So we started applying pressure.

First with synthetic inputs. Deterministically generated proposals that pushed against scope boundaries, authority citations, and budget limits. Then with live stochastic output from a real language model.

The language model wasn’t trusted. Its output went through canonicalization and structural parsing before it ever reached the kernel. If it failed to produce valid JSON, the kernel saw an empty candidate set and refused on its own terms.
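As an illustration of that boundary, the sketch below parses untrusted model text into a candidate set and lets a pure decision function refuse when the set is empty. The required fields, the refusal reason code, and the tie-breaking rule in `decide` are assumptions for the example, not the project's actual schema or selector.

```python
import json

REQUIRED_FIELDS = {"action", "authority"}  # hypothetical minimal schema

def parse_candidates(raw_text):
    """Turn untrusted model output into candidates; bad output yields []."""
    try:
        data = json.loads(raw_text)
    except (json.JSONDecodeError, TypeError):
        return []
    if not isinstance(data, list):
        return []
    return [c for c in data if isinstance(c, dict) and REQUIRED_FIELDS <= c.keys()]

def decide(candidates):
    """Deterministic: depends only on the candidate set, not on arrival order."""
    if not candidates:
        return {"decision": "REFUSE", "reason": "NO_ADMISSIBLE_CANDIDATE"}
    # Assumed selector: pick the candidate with the smallest canonical encoding.
    chosen = min(candidates, key=lambda c: json.dumps(c, sort_keys=True))
    return {"decision": "ACTION", "candidate": chosen}

print(decide(parse_candidates("Sure! Here is my plan...")))
print(decide(parse_candidates('[{"action": "send_notice", "authority": "clause.notify"}]')))
```

The point of the split is that malformed output never reaches the kernel as an error to be handled. It reaches the kernel as an empty set, and refusal on an empty set is ordinary kernel behavior.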

Across hundreds of live cycles, replay divergence remained zero. The selector stayed deterministic. No unwarranted side effects occurred. Refusals happened for structural reasons, not because of nondeterminism.

The important thing wasn’t that the LLM behaved nicely. It was that the sovereign substrate didn’t change character under noisy input.

Letting the System Change Its Own Law

After that, we allowed the constitution itself to change.

This is where many systems quietly collapse. If a system can modify its own rules, it’s easy for authority to blur. That’s exactly what we wanted to avoid.

Amendments had to pass through a formal gate sequence. Schema validation. Authority checks. Density preservation. Cooling periods. Ratchet constraints that allowed tightening but not loosening structural bounds. If an amendment attempted to relax a safety invariant, it failed deterministically.
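A gate sequence of this kind can be sketched as a fixed-order series of checks that returns the first failure. The sketch rests on several assumptions: the post does not define density, so it is treated here as a single number that must stay strictly below a bound; the only amendable fields are that bound and the cooling period; and the gate order, field names, and reason codes are invented for the example.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Constitution:
    max_density: float    # hypothetical structural bound
    cooling_cycles: int   # hypothetical wait between proposal and adoption
    amenders: frozenset   # identities allowed to propose amendments

@dataclass(frozen=True)
class Amendment:
    proposer: str
    proposed_at: int
    new_max_density: float
    new_cooling_cycles: int

def gate(amendment, current, now, current_density):
    """Run every gate in a fixed order; return (admitted, reason)."""
    if not (0.0 < amendment.new_max_density <= 1.0) or amendment.new_cooling_cycles < 0:
        return (False, "SCHEMA")
    if amendment.proposer not in current.amenders:
        return (False, "AUTHORITY")
    if current_density >= amendment.new_max_density:
        return (False, "DENSITY")   # existing state would violate the new bound
    if now - amendment.proposed_at < current.cooling_cycles:
        return (False, "COOLING")
    # Ratchet: bounds may tighten or stay the same, never loosen.
    if (amendment.new_max_density > current.max_density
            or amendment.new_cooling_cycles < current.cooling_cycles):
        return (False, "RATCHET")
    return (True, "ADMITTED")

current = Constitution(max_density=0.5, cooling_cycles=3, amenders=frozenset({"sovereign"}))
tighten = Amendment("sovereign", proposed_at=10, new_max_density=0.4, new_cooling_cycles=3)
loosen = Amendment("sovereign", proposed_at=10, new_max_density=0.6, new_cooling_cycles=3)

print(gate(tighten, current, now=13, current_density=0.2))
print(gate(loosen, current, now=13, current_density=0.2))
```

Since `gate` is a pure function of its arguments, the same amendment against the same state always fails at the same gate, which is the sense in which a loosening attempt "failed deterministically."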

The result was something unusual: a system that can change its own governing document while preserving the structural constraints that define it.

Replay still held. Density stayed below the bound. There was no authority laundering.

Sharing Authority Without Inflating It

Next came delegation.

Authority could be granted to external identities through explicit treaties. Grants had to specify scope. They had to cite authority. They had to respect density constraints. Delegated actions had to be signed with Ed25519 keys and verified by the kernel.

Grants could be revoked. They could expire. They could not be re-delegated. They could not exceed the grantor’s own permissions.
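Those four rules can be sketched in a few lines. This is a simplified model with hypothetical names (`Grant`, `TreatyBook`), and it leaves out two things the text mentions: Ed25519 signature verification, which the Python standard library does not provide, and density accounting. The sketch assumes the caller's identity has already been verified, and that a grant is valid through its `expires_at` cycle inclusive.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Grant:
    grantor: str
    grantee: str
    scope: frozenset
    expires_at: int  # last cycle at which the grant is valid (assumed convention)

class TreatyBook:
    def __init__(self, sovereign, sovereign_scope):
        self.sovereign = sovereign
        self.sovereign_scope = frozenset(sovereign_scope)
        self.grants = {}  # grantee -> Grant

    def admit_grant(self, grant):
        if grant.grantor != self.sovereign:
            return False  # only the sovereign grants, so no re-delegation
        if not grant.scope <= self.sovereign_scope:
            return False  # a grant cannot exceed the grantor's own permissions
        self.grants[grant.grantee] = grant
        return True

    def revoke(self, grantee):
        self.grants.pop(grantee, None)

    def authorizes(self, grantee, action, cycle):
        g = self.grants.get(grantee)
        return g is not None and cycle <= g.expires_at and action in g.scope

book = TreatyBook("sovereign", {"read", "notify"})
book.admit_grant(Grant("sovereign", "alice", frozenset({"read"}), expires_at=10))

print(book.authorizes("alice", "read", cycle=5))    # inside scope, before expiry
print(book.authorizes("alice", "read", cycle=11))   # after expiry
print(book.admit_grant(Grant("alice", "carol", frozenset({"read"}), expires_at=10)))
```

Making the sovereign the only admissible grantor is one simple way to rule out delegation chains by construction rather than by detection.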

Then we ran sustained churn. Grants and revocations happening continuously. Density approaching its upper bound. Constitutional tightening happening mid-session.

The system absorbed it. Density never crossed the line. No delegation chain appeared. Replay divergence remained zero.

Authority could be shared, but it never became ambiguous.

The Last Structural Question: Identity

Up to this point, the sovereign root key had been static. That’s acceptable for a prototype. It’s a structural weakness in anything meant to persist.

A static key creates a single point of failure. Lose it, and the system dies. Replace it carelessly, and replay forks. Swap it silently, and authority continuity becomes unverifiable.

The solution was to treat identity as lineage rather than as a single key.

Each successor key must be derived from its predecessor. The transition must be admitted through a typed artifact. Activation must happen only at a cycle boundary. The identity chain must be hash-anchored and reconstructible from logs alone.

If anything in that sequence is inconsistent, boundary verification fails.
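The hash-anchored part of that design can be sketched as follows. Keys are represented by plain string identifiers, and each rotation record commits to the previous record's hash; real key derivation, signatures, and the typed transition artifact are deliberately left out. The record fields, the `"ROOT"` anchor, and the function names are all assumptions for the example.

```python
import hashlib
import json

def link_hash(prev_hash, predecessor, successor, cycle):
    """Hash a rotation record together with the hash of the record before it."""
    body = json.dumps(
        {"prev": prev_hash, "from": predecessor, "to": successor, "cycle": cycle},
        sort_keys=True, separators=(",", ":"),
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_rotation(lineage, root, successor, cycle):
    """Record a rotation from the current key to `successor` at a cycle boundary."""
    prev_hash = lineage[-1]["hash"] if lineage else "ROOT"
    predecessor = lineage[-1]["to"] if lineage else root
    lineage.append({
        "from": predecessor, "to": successor, "cycle": cycle,
        "hash": link_hash(prev_hash, predecessor, successor, cycle),
    })

def verify_lineage(lineage, root):
    """Rebuild the chain from the records alone; any inconsistency fails."""
    prev_hash, current, last_cycle = "ROOT", root, -1
    for link in lineage:
        if link["from"] != current or link["cycle"] <= last_cycle:
            return False
        if link["hash"] != link_hash(prev_hash, link["from"], link["to"], link["cycle"]):
            return False
        prev_hash, current, last_cycle = link["hash"], link["to"], link["cycle"]
    return True

lineage = []
append_rotation(lineage, "k0", "k1", cycle=10)
append_rotation(lineage, "k0", "k2", cycle=20)
print(verify_lineage(lineage, "k0"))

forged = [dict(link) for link in lineage]
forged[0]["to"] = "kX"  # swap in a different successor after the fact
print(verify_lineage(forged, "k0"))
```

Verification needs nothing but the logged records and the root identifier, which is the property the text describes as "reconstructible from logs alone."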

Across more than five hundred cycles, thirteen lawful rotations were executed. Five distinct boundary faults were deliberately injected: wrong commit signer, wrong start signer, missing successor state, spurious successor state, and chain mismatch. Each was detected. Replay divergence remained zero.

Identity rotation did not fracture continuity. It did not inflate authority. It did not create forks.

The system could survive its own key changing.

Delegation Under a New Sovereign

There was one more subtle problem.

When identity rotates, what happens to active delegation?

If treaties carry over automatically, the new sovereign implicitly inherits obligations it hasn’t endorsed. If they’re erased, legitimate authority vanishes.

The adopted model was suspension followed by explicit ratification.

When a successor activates, all active treaties enter a suspended state. They remain visible in replay. They no longer authorize action. The new sovereign must ratify each one explicitly before it regains effect.

Suspended treaties do not count toward effective density. New grants are blocked until suspension is resolved. Expired suspended grants fall away automatically.
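The suspension rules can be read as a small state machine. The sketch below is one possible reading, with hypothetical names throughout: effective density is approximated by a count of active treaties, and a suspension is resolved either by ratification or by expiry, since the post does not describe other resolution paths.

```python
from dataclasses import dataclass

ACTIVE, SUSPENDED = "ACTIVE", "SUSPENDED"

@dataclass
class Treaty:
    grantee: str
    expires_at: int
    status: str = ACTIVE

class Registry:
    def __init__(self):
        self.treaties = {}  # treaty id -> Treaty

    def on_successor_activated(self):
        """At rotation, every active treaty is suspended, not deleted."""
        for t in self.treaties.values():
            if t.status == ACTIVE:
                t.status = SUSPENDED

    def expire(self, cycle):
        """Expired treaties fall away, whether active or suspended."""
        self.treaties = {k: t for k, t in self.treaties.items() if cycle <= t.expires_at}

    def ratify(self, treaty_id):
        t = self.treaties.get(treaty_id)
        if t is None or t.status != SUSPENDED:
            return False
        t.status = ACTIVE
        return True

    def grant(self, treaty_id, treaty):
        """New grants are blocked while any suspension is unresolved."""
        if any(t.status == SUSPENDED for t in self.treaties.values()):
            return False
        self.treaties[treaty_id] = treaty
        return True

    def authorizes(self, treaty_id):
        t = self.treaties.get(treaty_id)
        return t is not None and t.status == ACTIVE

    def effective_count(self):
        """Stand-in for effective density: suspended treaties do not count."""
        return sum(1 for t in self.treaties.values() if t.status == ACTIVE)

reg = Registry()
reg.grant("t1", Treaty("alice", expires_at=10))
reg.grant("t2", Treaty("bob", expires_at=3))
reg.on_successor_activated()
print(reg.effective_count(), reg.authorizes("t1"))
```

After the rotation both treaties are still present in the registry, so they remain visible to replay, but neither authorizes anything until the new sovereign calls `ratify`.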

Delegation continuity is preserved without silent inheritance.

What This Means

Within the declared assumptions (a single sovereign, a deterministic kernel, a trusted observation channel, and a non-Byzantine environment), the internal structure is now closed.

There are no remaining unmodeled transitions inside that regime.

The system can:

Act only under explicit authority.

Refuse deterministically.

Amend its own law without proxy override.

Delegate authority in a bounded way.

Survive sustained churn and tightening constraints.

Operate under live stochastic inhabitation.

Rotate identity without breaking replay continuity.

That’s not hype. It’s a statement about structure.

What It Does Not Mean

It does not mean the system is adversarially hardened.

It does not solve key compromise recovery.

It does not defend against a malicious host falsifying observations.

It does not implement distributed consensus or Byzantine fault tolerance.

What has been built is a coherent sovereign substrate under explicit assumptions. The outer threat surface still exists. It just hasn’t been addressed yet.

Postscript

Sovereignty here is no longer tied to a single key. It’s tied to a lawful chain of custody. Law can evolve. Authority can be shared and withdrawn. Identity can rotate. All of it remains replay-verifiable and density-constrained.

The original question was whether sovereignty could be reduced to deterministic structure rather than informal convention.

The answer is yes.

The next question is how that structure behaves when the environment stops cooperating.