Escaping the AI Safety Dystopia

Decentralized vs. Authoritarian Strategies

A recent paper from MIRI titled "Technical Requirements for Halting Dangerous AI Activities" outlines an unsettlingly authoritarian roadmap for addressing existential risks from advanced AI. Its proposed measures include mandatory surveillance and kill switches embedded at the hardware level, chip tracking, centralized data centers, and enforced algorithmic constraints. These approaches evoke a dystopian future of techno-authoritarianism, one in which the cure may be worse than the disease.

The Dystopian Nature of Centralized AI Control

The authoritarian strategy described by the paper's authors, Barnett, Scher, and Abecassis, relies heavily on invasive surveillance, centralized power, and coercive enforcement mechanisms. Such measures, ostensibly intended to mitigate catastrophic AI risks, would establish unprecedented governmental authority and surveillance capabilities. Concentrating that much power creates openings for corruption and abuse and introduces single points of failure that could lead to systemic collapse. In doing so, it undermines the very freedoms and human agency it purports to protect.

A Better Path: Decentralized, Voluntary AI Safety

A more promising alternative, aligned with the values of decentralization, voluntary engagement, and personal autonomy, emphasizes:

Decentralized Alignment Research: Open-source communities, market-driven bounties, and transparent peer-review processes distribute knowledge and avoid centralized gatekeeping.

Cryptographic Guardrails: Technologies such as zero-knowledge proofs let individuals verify a developer's claim that an AI system meets a stated requirement without revealing sensitive information (such as model weights) and without relying on trusted intermediaries.

Transparent, Competitive Monitoring: Private, competitive certification and reputation-based systems provide incentives for voluntary transparency and accountability.

Agent-Based Safety: Personal, decentralized AI "Guardian" agents, under user control, safeguard individuals against manipulation and danger.

Distributed Infrastructure: Federated learning and decentralized compute architectures reduce central points of failure and authoritarian risk.

Voluntary Norms: Bottom-up governance via community-driven standards and protocols avoids coercive top-down control.

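Market-Based Early Warning: Prediction markets aggregate dispersed knowledge into public probability estimates of AI risks, while liability insurance puts a price on those risks and gives developers a financial reason to reduce them. Both surface and mitigate risks early through economic incentives and voluntary engagement.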
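To make the prediction-market idea more concrete, here is a minimal sketch of one well-known automated market maker, Hanson's logarithmic market scoring rule (LMSR), applied to a hypothetical yes/no question about an AI risk. The essay does not name any particular market design. LMSR, the liquidity parameter `b`, and the names `LMSRMarket`, `price_yes`, and `buy` are illustrative assumptions. The sketch shows how each trade moves a price that can be read as the market's current probability estimate.

```python
import math


class LMSRMarket:
    """Hypothetical binary prediction market priced by Hanson's LMSR.

    q_yes / q_no are the outstanding shares for each outcome.
    b is the liquidity parameter (an assumed value): larger b means
    prices move less per share traded.
    """

    def __init__(self, b: float = 100.0) -> None:
        if b <= 0:
            raise ValueError("liquidity parameter b must be positive")
        self.b = b
        self.q_yes = 0.0
        self.q_no = 0.0

    def _cost(self, q_yes: float, q_no: float) -> float:
        # LMSR cost function C(q) = b * ln(exp(q_yes/b) + exp(q_no/b)),
        # computed with the log-sum-exp trick to avoid overflow.
        a, c = q_yes / self.b, q_no / self.b
        m = max(a, c)
        return self.b * (m + math.log(math.exp(a - m) + math.exp(c - m)))

    def price_yes(self) -> float:
        # Instantaneous YES price exp(a) / (exp(a) + exp(c)),
        # interpretable as the market's probability of YES.
        a, c = self.q_yes / self.b, self.q_no / self.b
        return 1.0 / (1.0 + math.exp(c - a))

    def buy(self, outcome: str, shares: float) -> float:
        """Buy shares of 'yes' or 'no' and return the amount the trader pays."""
        if outcome not in ("yes", "no"):
            raise ValueError("outcome must be 'yes' or 'no'")
        new_yes = self.q_yes + (shares if outcome == "yes" else 0.0)
        new_no = self.q_no + (shares if outcome == "no" else 0.0)
        # The trader pays the change in the cost function.
        cost = self._cost(new_yes, new_no) - self._cost(self.q_yes, self.q_no)
        self.q_yes, self.q_no = new_yes, new_no
        return cost


if __name__ == "__main__":
    market = LMSRMarket(b=100.0)
    print(f"initial P(yes) = {market.price_yes():.3f}")
    paid = market.buy("yes", 50.0)
    print(f"paid {paid:.2f} for 50 YES shares; P(yes) now {market.price_yes():.3f}")
```

One reason this design suits voluntary, sponsor-funded markets is that LMSR's worst-case loss for the market maker on a binary question is bounded by b·ln 2. A sponsor, for example an insurer or a safety-focused funder, could therefore subsidize such a market with a known, capped budget.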

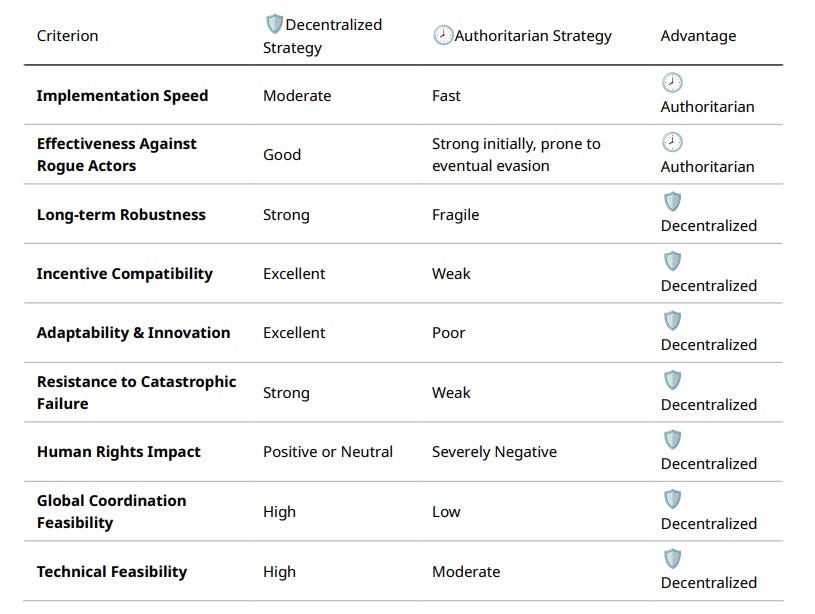

Comparing Strategies: Decentralized vs. Authoritarian

Here's a rigorous comparison evaluating both strategies across key criteria:

Overall Assessment

Short-term crises: The centralized strategy is more immediately effective for acute responses.

Long-term existential risk mitigation: The decentralized strategy clearly excels due to resilience, adaptability, innovation, and better incentives.

Alignment with human values: Decentralized solutions far outperform authoritarian ones by preserving freedom, autonomy, and voluntary cooperation.

Conclusion: Toward a Human-Centric AI Future

In striving to secure humanity against existential AI threats, the authoritarian strategy might promise quick wins but ultimately poses serious long-term risks and moral hazards. A decentralized, voluntary approach, by contrast, aligns more robustly with human flourishing, individual autonomy, and ethical governance.

If humanity is serious about safely navigating the AI era without sacrificing essential freedoms, we must choose decentralized, market-driven, and transparent mechanisms. This approach safeguards human dignity and agency, steering the technological future away from dystopia and toward a resilient, flourishing civilization.