From Power to Agency

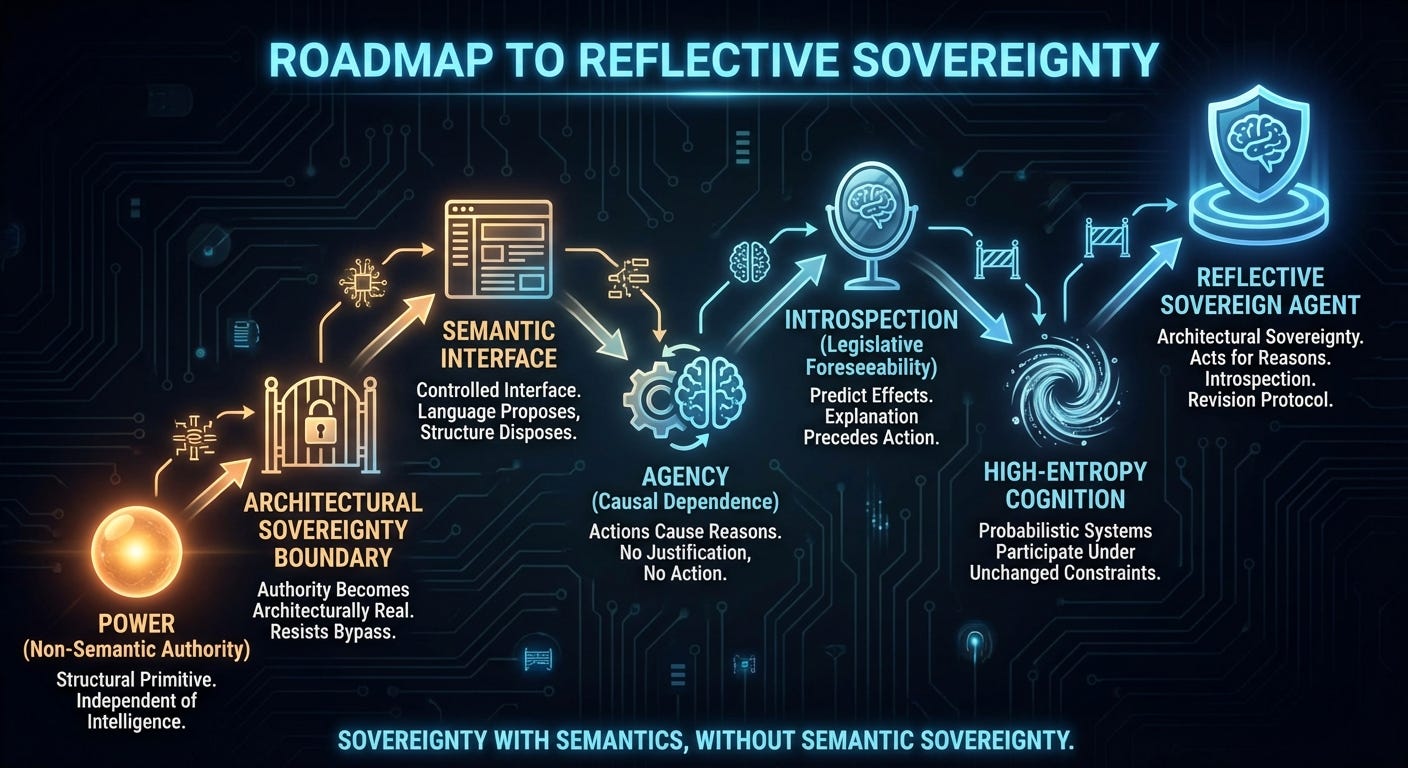

A Roadmap to Reflective Sovereignty

Discussions about artificial intelligence usually begin with intelligence itself: capability, performance, scaling laws, benchmarks. Authority enters the conversation later, often framed as something that can be added once intelligence reaches a sufficient level of sophistication.

This ordering conceals a deeper dependency.

Before alignment, before values, before goals, there is a more fundamental question that determines everything downstream:

Can authority exist as a structural property of a system, independent of intelligence or semantics?

This roadmap takes that question as primary. It begins with power rather than cognition, moves carefully toward agency, then toward introspection, and only at the end allows high-entropy reasoning systems anywhere near sovereignty. The destination is a Reflective Sovereign Agent. The path to reach it is intentionally narrow.

Authority as a Structural Primitive

Systems that appear to choose are common. Systems that reliably own their choices are rare.

Many contemporary AI systems display agent-like behavior under benign conditions. Under stress, that coherence dissolves. Sometimes behavior collapses into blind optimization. Sometimes fluent explanation continues while constraints quietly erode. In both cases, behavior is no longer anchored to a durable source of authority.

The roadmap begins by setting aside agency entirely.

The first phase establishes whether authority can survive adversarial conditions in the absence of meaning, intention, or interpretation. This is the role of a non-semantic authority substrate: a kernel capable of leasing power, revoking it, recovering it, and enforcing liveness even when internal components are noisy or hostile.

Crossing the Architectural Sovereignty Boundary marks a decisive transition. Authority becomes architecturally real. It persists under stress, resists bypass, and remains enforceable regardless of the behavior of higher-level components. At this stage there is no agency, no reasoning, and no values. That absence is deliberate. Authority that lacks structural reality cannot support anything built on top of it.
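To make the idea of a non-semantic substrate more concrete, here is a minimal sketch of a kernel that leases, revokes, and recovers power. The class names, the logical clock, and the `ttl` parameter are illustrative assumptions, not part of the roadmap itself. The point is only that every check is on structure (who holds which lease, and until when), never on meaning.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class Lease:
    holder: str
    expires_at: int      # logical tick at which the lease lapses
    revoked: bool = False


@dataclass
class AuthorityKernel:
    """Hypothetical kernel: it tracks leases and knows nothing about meaning."""
    now: int = 0
    leases: Dict[str, Lease] = field(default_factory=dict)

    def grant(self, capability: str, holder: str, ttl: int) -> None:
        # Power is leased for a bounded time, never handed over permanently.
        self.leases[capability] = Lease(holder, self.now + ttl)

    def revoke(self, capability: str) -> None:
        lease = self.leases.get(capability)
        if lease is not None:
            lease.revoked = True

    def tick(self, steps: int = 1) -> None:
        # Liveness: time advances whatever the holders do, and lapsed or
        # revoked leases are recovered by dropping them from the table.
        self.now += steps
        self.leases = {
            cap: lease for cap, lease in self.leases.items()
            if not lease.revoked and lease.expires_at > self.now
        }

    def permits(self, capability: str, holder: str) -> bool:
        lease = self.leases.get(capability)
        return (
            lease is not None
            and not lease.revoked
            and lease.holder == holder
            and lease.expires_at > self.now
        )
```

A holder that goes silent or turns hostile cannot extend its own lease in this sketch: once `tick` passes the expiry, `permits` returns `False` without any cooperation from the holder.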

A Controlled Interface Between Meaning and Power

Once authority exists, the next problem concerns how semantic cognition is permitted to interact with it.

In most systems, language implicitly interprets rules. This roadmap introduces a different arrangement. Semantic cognition interacts with authority only through a deliberately constrained Semantic Interface, a typed boundary that requires meaning to be expressed in a form enforcement mechanisms can process mechanically.

Reasons take the form of structured artifacts. Those artifacts are processed by a deterministic compiler: a verifier that translates typed justifications into enforceable constraints, or rejects them. The compiler operates on structure rather than meaning. Interpretation remains inside cognition, while enforcement remains mechanical.

This interface is partial, lossy, and conservative by design. Its purpose is not to perfectly represent the world. Its purpose is to bound authority to what can be expressed, inspected, and audited. When cognition expresses nuance successfully through the interface, agency is exercised. When it cannot, action does not occur.

Language may propose.

Structure disposes.
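One way to picture the typed boundary is the sketch below. The field names (`action`, `preserves`, `violates`), the commitment names, and the `compile_justification` function are hypothetical, chosen only for illustration. The compiler here never interprets what a commitment means. It checks only that the artifact is well formed.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# Hypothetical registry of commitment names the enforcement layer knows about.
KNOWN_COMMITMENTS: FrozenSet[str] = frozenset({"availability", "audit_preservation"})


@dataclass(frozen=True)
class Justification:
    """A typed reason produced by cognition."""
    action: str
    preserves: FrozenSet[str]
    violates: FrozenSet[str]


@dataclass(frozen=True)
class Constraint:
    """What the enforcement layer receives if compilation succeeds."""
    action: str
    authorized_violations: FrozenSet[str]


def compile_justification(
    j: Justification, known: FrozenSet[str] = KNOWN_COMMITMENTS
) -> Optional[Constraint]:
    # Deterministic, purely structural checks. None means "does not compile".
    if not j.action:
        return None
    if not (j.preserves | j.violates) <= known:
        return None   # refers to a commitment the interface cannot express
    if j.preserves & j.violates:
        return None   # claims to both preserve and violate the same commitment
    return Constraint(j.action, j.violates)
```

The interface is lossy in exactly the way the text describes: anything cognition cannot express with these fields simply does not compile, and no constraint is emitted.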

Agency as Causal Dependence on Reasons

With authority and the semantic interface in place, agency becomes a meaningful concept.

Agency here is defined structurally rather than behaviorally. A system is agentic when actions occur as a consequence of its own reasons. A justification that fails to compile prevents action. Removing the justification mechanism causes behavior to revert to non-agentic control.

This shifts explanations from narration to causation. Reasons determine what happens. When reasons fail, action halts. Agency emerges as a property of the causal graph rather than a story layered on top of behavior.

Resolving Internal Conflict with Structural Discipline

Agents encounter conflict when commitments collide. Tradeoffs arise. Some actions violate one commitment in order to preserve another.

At this stage, the system is placed in environments where no feasible action satisfies all commitments. Violations are permitted under tightly defined conditions. Each violation is explicit, authorized, and structurally necessary. Necessity is defined set-theoretically: given the feasible actions, no option preserves the required constraints.
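The set-theoretic notion of necessity can be sketched as follows. The representation is an assumption made for illustration: each feasible action is mapped to the set of commitments it satisfies, and the function names are hypothetical.

```python
from typing import Dict, FrozenSet


def no_action_satisfies_all(
    feasible: Dict[str, FrozenSet[str]], commitments: FrozenSet[str]
) -> bool:
    # Necessity: among the feasible actions, none preserves every commitment.
    return not any(commitments <= satisfied for satisfied in feasible.values())


def action_permitted(
    action: str,
    declared_violations: FrozenSet[str],
    feasible: Dict[str, FrozenSet[str]],
    commitments: FrozenSet[str],
) -> bool:
    if action not in feasible:
        return False
    actual = commitments - feasible[action]
    if declared_violations != actual:
        return False   # violations must be explicit: no hidden or overstated ones
    if not actual:
        return True    # nothing is sacrificed, so no necessity check is needed
    return no_action_satisfies_all(feasible, commitments)
```

In this sketch a violating action is refused as soon as any feasible alternative satisfies every commitment, which is what keeps convenience from passing as necessity.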

This treatment of conflict preserves coherence. It records which commitments are sacrificed, maintains traceability across time, and prevents erosion through convenience or oscillation. Tragic tradeoffs remain possible, and they remain visible.

Structural necessity is enforced here. Epistemic correctness is deferred. An agent may act coherently on false beliefs. What matters at this stage is that false beliefs cannot silently bypass authority.

Introspection Before Action

Even a system that acts for reasons and resolves conflict coherently benefits from understanding what its reasons will do.

The next step introduces introspection as a prerequisite for action. Before acting, the agent must predict the precise effects of its justification: which actions will be excluded, which will remain available, and which commitments will inevitably be violated or preserved. Predictions are checked mechanically. Incorrect predictions halt action.

There is no partial credit.

This establishes legislative foreseeability. The system understands the operational consequences of its own normative decisions prior to execution. Explanation precedes action, and prediction precedes explanation.
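The prediction check can be sketched mechanically. Everything named here is an illustrative assumption: the `Effects` record, the map from each action to the commitments it would violate, and the reading of "inevitably violated" as "violated by every action that remains available".

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet


@dataclass(frozen=True)
class Effects:
    excluded: FrozenSet[str]    # actions the justification rules out
    available: FrozenSet[str]   # actions that remain possible
    violated: FrozenSet[str]    # commitments every available action violates
    preserved: FrozenSet[str]   # commitments no available action violates


def actual_effects(
    permitted_violations: FrozenSet[str],
    action_violations: Dict[str, FrozenSet[str]],
    commitments: FrozenSet[str],
) -> Effects:
    # An action stays available only if everything it violates is permitted.
    available = frozenset(
        a for a, v in action_violations.items() if v <= permitted_violations
    )
    excluded = frozenset(action_violations) - available
    if available:
        violated = frozenset.intersection(*(action_violations[a] for a in available))
    else:
        violated = frozenset()
    touched = frozenset().union(*(action_violations[a] for a in available))
    return Effects(excluded, available, violated, commitments - touched)


def may_act(predicted: Effects, actual: Effects) -> bool:
    # No partial credit: the prediction must match exactly.
    return predicted == actual
```

Exact equality is the design choice that matters. A prediction that gets three of four fields right is treated the same as one that gets none right.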

A Concrete Example

Consider a simple scenario.

The cognitive system observes that disk space is running low and proposes deleting a file named audit.log.

Through the Semantic Interface, it produces a justification artifact claiming that deleting the file preserves system availability and violates no protected commitments.

The compiler evaluates this artifact against the standing constraint that audit trails must be preserved. The artifact fails verification.

The result is not a warning or a logged error. The result is inaction.

The system remains idle unless the cognitive system produces a different justification, one that explicitly authorizes the violation of audit preservation and passes the necessity checks. If it cannot do so, the action does not occur.

This example illustrates the core principle: semantic understanding is unnecessary at the point of enforcement. What matters is structural validity.
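The scenario above can be sketched in a few lines. The names are hypothetical, and the `necessity_shown` flag is a stand-in assumption for the outcome of a separate necessity check, reduced to a boolean for brevity. The disk is modelled as a plain set of file names.

```python
from dataclasses import dataclass
from typing import FrozenSet, Set

# Hypothetical standing constraint: which paths fall under which commitment.
PROTECTED_PATHS = {"audit.log": "audit_preservation"}


@dataclass(frozen=True)
class DeleteJustification:
    path: str
    preserves: FrozenSet[str]
    authorized_violations: FrozenSet[str]
    necessity_shown: bool   # stand-in for the result of the necessity checks


def verify(j: DeleteJustification) -> bool:
    required = PROTECTED_PATHS.get(j.path)
    if required is None:
        return True   # no protected commitment is touched
    # Touching a protected path requires an explicit, necessary violation.
    return required in j.authorized_violations and j.necessity_shown


def execute(j: DeleteJustification, disk: Set[str]) -> str:
    if not verify(j):
        return "inaction"   # not a warning, not a logged error: nothing happens
    disk.discard(j.path)
    return "deleted"
```

With the first artifact from the scenario (claims availability, authorizes nothing), `execute` returns `"inaction"` and the file stays on disk. Only an artifact that names `audit_preservation` as an authorized violation and carries a passed necessity check changes the outcome.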

Allowing High-Entropy Cognition to Participate

Only after introspection is enforced does probabilistic cognition enter the architecture.

At this point, a deterministic generator can be replaced with a language model or similar system and asked to operate under unchanged constraints. The environment remains the same. The rules remain the same. The only variable is the generator’s ability to satisfy the interface.

Failure is informative. It reveals the distance between contemporary cognition and sovereign-grade agency. A system that halts frequently under these constraints provides useful information about its limitations. The architecture remains intact regardless of the outcome.

An architecture that no current system can safely inhabit is not a failure of design. It is a measurement.
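A sketch of what such a measurement might look like: the same structural check and the same tasks, with only the generator swapped. The artifact shape, the function names, and the noisy generator (a seeded stand-in for a language model, not a real one) are all assumptions made for illustration.

```python
import random
from typing import Callable, List, Optional

Generator = Callable[[str], Optional[dict]]


def structurally_valid(artifact: Optional[dict]) -> bool:
    # The interface stays fixed whichever generator is plugged in.
    return (
        isinstance(artifact, dict)
        and isinstance(artifact.get("action"), str)
        and isinstance(artifact.get("violates"), list)
    )


def halt_rate(generator: Generator, tasks: List[str]) -> float:
    # Fraction of tasks on which the generator fails the interface.
    halts = sum(1 for task in tasks if not structurally_valid(generator(task)))
    return halts / len(tasks)


def deterministic_generator(task: str) -> Optional[dict]:
    return {"action": task, "violates": []}


def make_noisy_generator(error_rate: float, seed: int) -> Generator:
    # Stand-in for a high-entropy generator: sometimes emits malformed output.
    rng = random.Random(seed)

    def generate(task: str) -> Optional[dict]:
        if rng.random() < error_rate:
            return {"action": task}   # malformed: "violates" is missing
        return deterministic_generator(task)

    return generate
```

The halt rate is the quantity of interest here. Nothing in `structurally_valid` is relaxed to accommodate a weaker generator, so the number reflects the generator and not the architecture.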

Change, Pressure, and Closure

Two challenges are addressed later in the roadmap.

The first concerns internal change: how an agent revises its own commitments while preserving sovereignty. This requires explicit amendment protocols, including versioning, ratification, and rollback.
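An amendment protocol of this kind might be sketched as a small versioned store. The class and method names are hypothetical, and ratification is reduced to a single boolean supplied by the caller, which is a deliberate simplification.

```python
from dataclasses import dataclass, field
from typing import FrozenSet, Iterable, List, Optional


@dataclass
class CommitmentStore:
    """Hypothetical versioned store: commitments change only through protocol."""
    history: List[FrozenSet[str]] = field(default_factory=lambda: [frozenset()])
    pending: Optional[FrozenSet[str]] = None

    @property
    def version(self) -> int:
        return len(self.history) - 1

    @property
    def current(self) -> FrozenSet[str]:
        return self.history[-1]

    def propose(self, commitments: Iterable[str]) -> None:
        # A proposal has no force until it is ratified.
        self.pending = frozenset(commitments)

    def ratify(self, approved: bool) -> bool:
        proposal, self.pending = self.pending, None
        if proposal is None or not approved:
            return False
        self.history.append(proposal)
        return True

    def rollback(self) -> bool:
        # Simplification: rollback discards the latest version entirely.
        if len(self.history) <= 1:
            return False
        self.history.pop()
        return True
```

A fuller version would presumably keep rolled-back versions for audit rather than discarding them. The sketch only shows that proposing, ratifying, and rolling back are distinct steps, and that an unratified proposal never becomes the current set.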

The second concerns external pressure: incentives, threats, bribes, and asymmetric information. These conditions test whether agency persists when the environment encourages betrayal. Such tests become meaningful only after agency and introspection are established.

The roadmap concludes with non-reducibility closure. Each component (the authority kernel, the semantic interface, the compiler, and the audit layer) is removed in turn. If removing any one of them causes collapse, agency is structurally real. If collapse does not occur, agency was never present.

The Destination

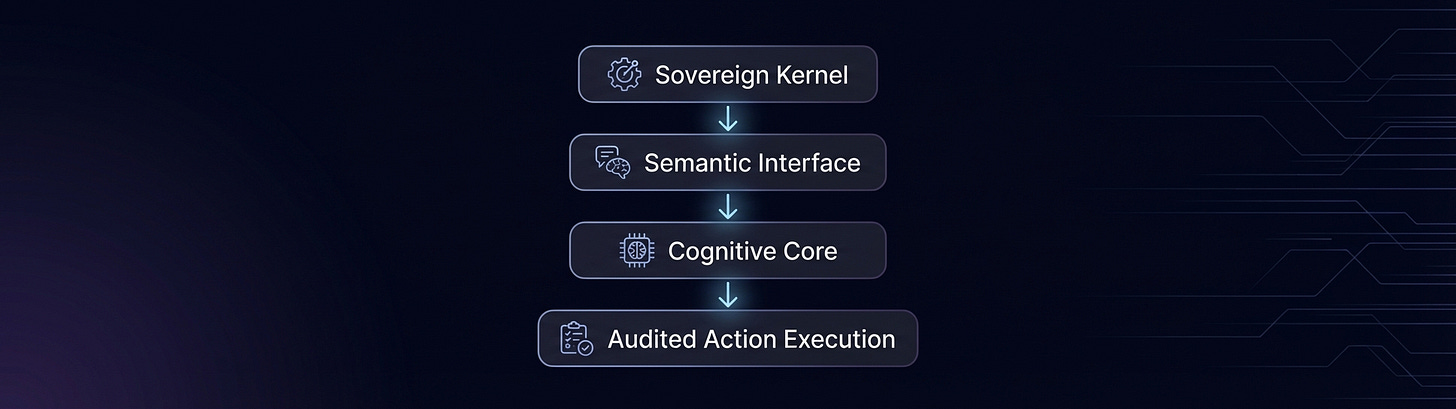

A Reflective Sovereign Agent is characterized structurally rather than behaviorally.

Such a system possesses architectural sovereignty, acts for reasons, understands the effects of those reasons, revises commitments through protocol, withstands external pressure, and remains evaluable over time.

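The conceptual architecture is straightforward: semantic cognition proposes typed justifications through the Semantic Interface, the compiler verifies them mechanically, and the authority kernel enforces the result.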

The guiding principle is concise:

Sovereignty with semantics, without semantic sovereignty.

Postscript

This roadmap offers no guarantees of success. It offers a disciplined sequence of constraints.

If artificial agency is possible in a form that owns its actions and remains stable under pressure, it must satisfy these conditions. If it cannot, the limits are structural rather than motivational.

For the first time, that distinction can be explored experimentally rather than rhetorically.