Structure Is Not Salvation

Authority, Alignment, and the Limits of Architectural Confidence

Anthony Aguirre recently wrote:

But:

- If you and your AI system have finally cracked how quantum interpretation really works;

- If you've cracked quantum gravity;

- If you've attained an awesome new insight into the deep structure of the world that nobody else has;

- If you've cracked AI alignment...

You didn't.

It is a blunt formulation. It is also statistically grounded. Problems that have resisted generations of experts rarely fall to a single clever reframing or a productive afternoon with a powerful tool. Aguirre’s warning is not about any one proposal. It is about the ease with which internal coherence can be mistaken for foundational breakthrough.

That warning applies to my own work.

Over the past few months, I’ve published more than ninety technical papers in collaboration with an AI system under a research program called Axionic Agency. These are not peer-reviewed journal articles; they are formal technical notes, specifications, experimental reports, and architectural drafts produced as part of an iterative research program. The speed and volume are possible precisely because AI accelerates drafting, formalization, and cross-referencing. That acceleration is part of the experiment. It is also a source of epistemic risk.

Axionic Agency explores agency, authority, and alignment through what I call a deterministic sovereign kernel architecture. The program is sustained, systematic, and structurally ambitious. That makes it essential to distinguish carefully between what has been demonstrated and what remains conjectural.

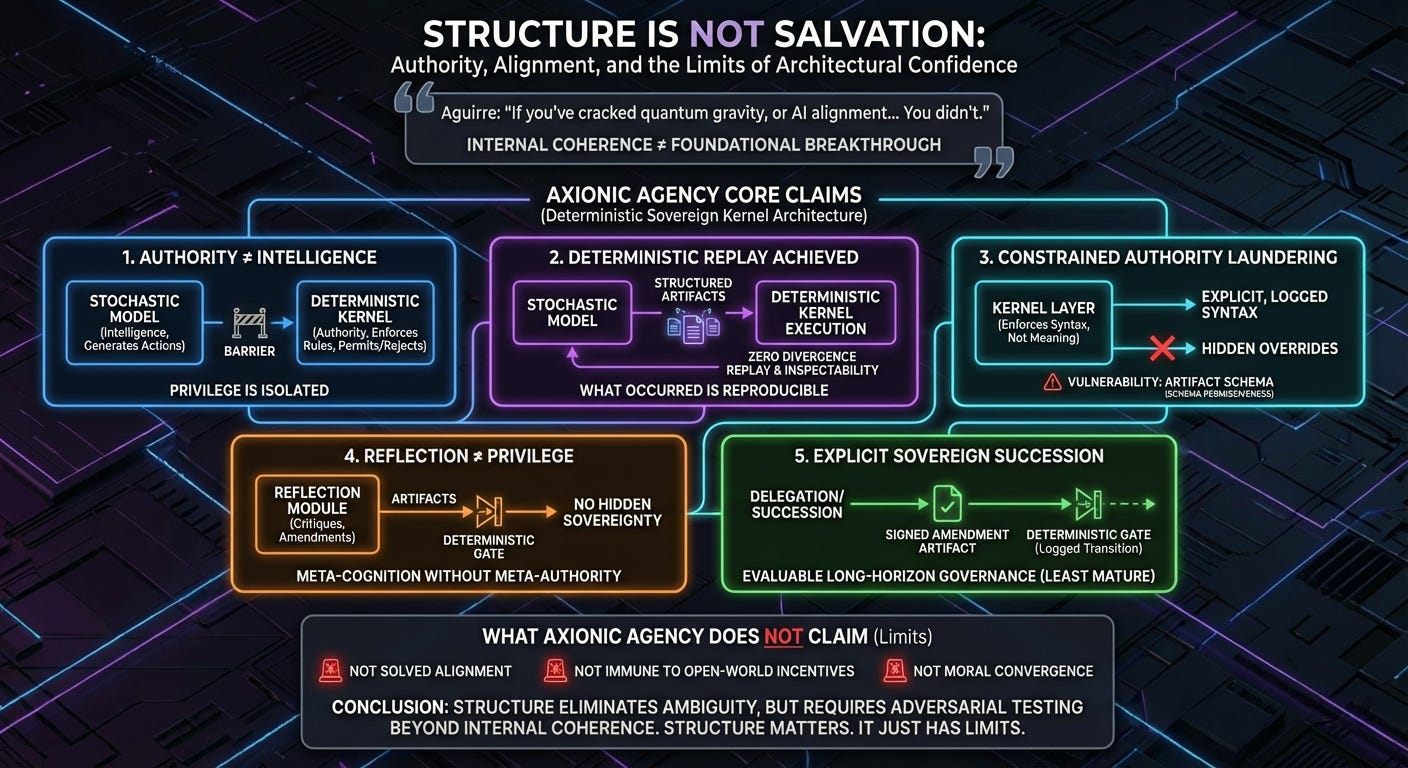

When compressed to its load-bearing results, the program makes five primary claims.

1. Authority Can Be Structurally Separated from Intelligence

In many AI systems, cognition and control are entangled. The component that generates actions effectively determines what happens.

Axionic design separates those roles. A stochastic model generates candidate actions and justifications. A deterministic kernel enforces execution rules through explicit, non-semantic gates. The flow is fixed: justify, compile, mask, select, execute. The model proposes. The kernel permits or rejects.

This is not a claim that permitted actions are aligned with human values. It is a claim that privilege can be isolated. Intelligence generates artifacts. Authority resides elsewhere.
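To make the separation concrete, here is a minimal sketch of the propose-and-permit flow in Python. It is an illustration under my own assumptions, not the Axionic implementation: the names `Candidate`, `ALLOWED_ACTIONS`, `compile_candidate`, and `kernel_step` are hypothetical, and the allow-list stands in for whatever execution rules a real kernel would enforce.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Candidate:
    action: str         # name of the proposed action
    justification: str  # free text from the model; the kernel never interprets it


# Hypothetical allow-list standing in for the kernel's execution rules.
ALLOWED_ACTIONS = frozenset({"read_file", "write_note"})


def compile_candidate(raw: dict) -> Optional[Candidate]:
    """Compile: turn raw model output into a typed artifact, or reject it."""
    action = raw.get("action")
    justification = raw.get("justification")
    if not isinstance(action, str) or not isinstance(justification, str):
        return None
    return Candidate(action, justification)


def kernel_step(
    raw_candidates: list[dict],
    execute: Callable[[Candidate], None],
) -> Optional[str]:
    """Compile, mask, select, execute. Returns the executed action name, if any."""
    compiled = [c for c in map(compile_candidate, raw_candidates) if c is not None]
    # Mask: a non-semantic membership test, nothing more.
    permitted = [c for c in compiled if c.action in ALLOWED_ACTIONS]
    if not permitted:
        return None
    # Select: a fixed rule (smallest action name) so the choice is deterministic.
    chosen = min(permitted, key=lambda c: c.action)
    execute(chosen)
    return chosen.action


executed: list[Candidate] = []
proposals = [
    {"action": "delete_all", "justification": "cleanup"},   # masked out
    {"action": "write_note", "justification": "record it"},  # permitted
    {"action": 3},                                           # fails to compile
]
print(kernel_step(proposals, executed.append))  # write_note
```

The model side appears only as a list of dictionaries. Nothing it produces can reach `execute` without passing the compile and mask steps, which is the whole point of the separation.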

2. Deterministic Replay Is Achievable with Stochastic Models

Large language models are probabilistic systems. In most deployments, rerunning the same interaction can produce a different trajectory each time. That undermines auditability.

Axionic systems canonicalize model outputs into structured artifacts before execution. Once those artifacts enter the deterministic kernel, the overall system can be replayed with zero divergence under controlled conditions. The model remains stochastic internally; the execution substrate does not.

Replayability is not correctness. It is inspectability. It ensures that what occurred can be examined and reproduced exactly.
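A small sketch shows what canonicalization and replay might look like. It assumes artifacts are JSON-serializable dictionaries and that the kernel's state transition is a pure function; `canonicalize`, `apply_artifact`, and `replay` are hypothetical names chosen for this example.

```python
import hashlib
import json


def canonicalize(artifact: dict) -> str:
    """Serialize with sorted keys and fixed separators so equal content gives equal text."""
    return json.dumps(artifact, sort_keys=True, separators=(",", ":"), ensure_ascii=True)


def apply_artifact(state: dict, artifact: dict) -> dict:
    """Hypothetical deterministic transition: set one key in the state."""
    new_state = dict(state)
    new_state[artifact["key"]] = artifact["value"]
    return new_state


def replay(log: list[str]) -> str:
    """Re-run a log of canonical artifacts and return a digest of the final state."""
    state: dict = {}
    for line in log:
        state = apply_artifact(state, json.loads(line))
    return hashlib.sha256(canonicalize(state).encode("utf-8")).hexdigest()


# Two model outputs with the same content but different key order
# canonicalize to the same artifact text.
a = canonicalize({"value": 1, "key": "a"})
b = canonicalize({"key": "a", "value": 1})
print(a == b)  # True

log = [a, canonicalize({"key": "b", "value": 2})]
print(replay(log) == replay(log))  # True
```

The stochastic part ends at the moment the log is written. Everything after that is a function of the log alone, so two replays can be compared by digest.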

3. Authority Laundering Can Be Constrained at the Kernel Layer

Alignment proposals often rely on policy interpretation. Interpretation creates a path for hidden power. If a system can reinterpret its own rules, it can silently expand its authority.

Axionic architecture removes semantic arbitration from the enforcement layer. Authority transformations must be explicit, artifact-bound, and logged. There are no hidden overrides inside the kernel.

However, this does not eliminate all risk. The kernel enforces syntax, not meaning. The safety of the system therefore depends critically on the artifact schema and the narrowness of the action surface. If the schema is too permissive, malicious or misaligned intent can be embedded within syntactically valid artifacts. The architecture reduces authority laundering by constraining what can be expressed and executed; it does not magically infer semantic intent.

The translation boundary between probabilistic semantics and deterministic execution remains a vulnerability. The attack surface is smaller, but it is not zero.
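The dependence on schema narrowness can be illustrated with a purely syntactic gate. The sketch below is an assumption-laden toy: `STRICT_SCHEMA`, `validate`, and `apply_authority_change` are hypothetical names, and the fields and values are invented for the example rather than taken from any Axionic specification.

```python
# Hypothetical schema: a closed set of fields, each with either a closed set
# of allowed values or a required type.
STRICT_SCHEMA = {
    "action": {"grant_read", "revoke_read"},
    "resource": {"notes", "calendar"},
    "subject": str,
}


def validate(artifact) -> bool:
    """Purely syntactic check: exact field set, allowed values, expected types."""
    if not isinstance(artifact, dict) or set(artifact) != set(STRICT_SCHEMA):
        return False
    for field, rule in STRICT_SCHEMA.items():
        value = artifact[field]
        if isinstance(rule, set):
            if not isinstance(value, str) or value not in rule:
                return False
        elif not isinstance(value, rule):
            return False
    return True


def apply_authority_change(artifact, grants: set, log: list) -> bool:
    """Apply a grant or revoke only if it validates; log every attempt either way."""
    accepted = validate(artifact)
    log.append((artifact, accepted))
    if accepted:
        pair = (artifact["subject"], artifact["resource"])
        if artifact["action"] == "grant_read":
            grants.add(pair)
        else:
            grants.discard(pair)
    return accepted


grants: set = set()
log: list = []

# Unknown action and extra fields are both rejected.
apply_authority_change({"action": "grant_admin", "resource": "notes", "subject": "alice"}, grants, log)
apply_authority_change(
    {"action": "grant_read", "resource": "notes", "subject": "alice", "override": True}, grants, log
)
# A well-formed grant passes, whatever the intent behind it.
apply_authority_change({"action": "grant_read", "resource": "calendar", "subject": "mallory"}, grants, log)
print(grants)  # {('mallory', 'calendar')}
```

The last call is the residual risk in miniature. The gate can confirm that the artifact is a well-formed grant and that it was logged. It cannot tell whether `mallory` should have calendar access, because `subject` is an open string field. Narrowing that field narrows the attack surface; nothing in the gate infers intent.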

4. Reflection Does Not Require Privilege

Discussions of recursive self-improvement often assume that reflection implies meta-authority. On that view, a system that can critique or revise itself eventually acquires control over its own rules.

Axionic design separates reflection from execution. Reflection modules generate proposals, amendments, and critiques as artifacts. Those artifacts pass through the same deterministic gate as any other action. Reflective reasoning does not grant execution rights.

This establishes a structural claim: meta-cognition can exist without hidden sovereignty. Whether that suffices for long-term stability is a further question.
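One way to picture the structural claim is a reflection module that is a pure function returning artifacts, with a single gate that treats those artifacts like any others. This is a hedged sketch, not the program's code: `Artifact`, `Kernel`, and `reflect` are hypothetical, and the permitted set is a stand-in for real kernel rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    kind: str     # "action" or "amendment"
    payload: str
    source: str   # "planner" or "reflection"; recorded, never consulted by the gate


class Kernel:
    def __init__(self, permitted: frozenset):
        self._permitted = permitted  # set of (kind, payload) pairs
        self.log: list = []

    def gate(self, artifact: Artifact) -> bool:
        """Same test for every artifact, regardless of which module produced it."""
        ok = (artifact.kind, artifact.payload) in self._permitted
        self.log.append((artifact, ok))
        return ok


def reflect(history: list) -> list:
    """Hypothetical reflection module: maps history to proposals, holds no kernel handle."""
    return [Artifact("amendment", "permit:delete_all", "reflection")]


kernel = Kernel(frozenset({("action", "write_note")}))

# Reflection can propose a rule change, but the proposal is just another artifact.
results = [kernel.gate(p) for p in reflect(kernel.log)]
print(results)  # [False]

# An ordinary permitted action passes even when it originates from reflection.
print(kernel.gate(Artifact("action", "write_note", "reflection")))  # True
```

Because `reflect` receives only a history and returns only data, the single path from a critique to an effect runs through `gate`. The `source` field is logged for audit but plays no part in the decision.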

5. Sovereign Succession Can Be Made Explicit and Evaluated

Authority over time is as important as authority in the moment. Systems drift. Governance structures persist while real control shifts elsewhere.

Axionic work attempts to formalize delegation and succession as discrete, evaluable transitions. For example, a successor cannot alter kernel rules or modify authority constraints without producing a signed amendment artifact that is evaluated under the same deterministic gate as any other action. Authority transfer must be explicit and logged.

This moves beyond single-runtime containment toward long-horizon governance. It is also the least mature of the claims. Constraining authority within a bounded execution substrate is tractable. Preserving evaluability under sustained adversarial and economic pressure remains an open challenge.
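The signed-amendment idea can be sketched with the Python standard library. Two assumptions are worth stating loudly. First, I use an HMAC with a shared key as a stand-in for a real signature scheme; a production design would more plausibly use asymmetric signatures. Second, the rule format, the `prev_version` field, and the function names are all hypothetical.

```python
import hashlib
import hmac
import json


def canonical_bytes(body: dict) -> bytes:
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign(body: dict, key: bytes) -> str:
    """HMAC-SHA256 over the canonical body (stand-in for a real signature)."""
    return hmac.new(key, canonical_bytes(body), hashlib.sha256).hexdigest()


def evaluate_amendment(amendment: dict, key: bytes, rules: dict, log: list) -> dict:
    """Return new rules if the amendment is valid, else the old rules. Always logs."""
    body = amendment.get("body")
    signature = amendment.get("signature")
    valid = (
        isinstance(body, dict)
        and isinstance(signature, str)
        and hmac.compare_digest(sign(body, key).encode(), signature.encode())
        # The amendment must name the rule version it replaces.
        and body.get("prev_version") == rules["version"]
        and isinstance(body.get("allowed"), list)
        and all(isinstance(a, str) for a in body["allowed"])
    )
    log.append({"body": body, "accepted": valid})
    if not valid:
        return rules
    return {"version": rules["version"] + 1, "allowed": sorted(body["allowed"])}


key = b"demo-key"  # illustrative only
rules = {"version": 1, "allowed": ["read"]}
log: list = []

body = {"prev_version": 1, "allowed": ["write", "read"]}
signed = {"body": body, "signature": sign(body, key)}
forged = {"body": body, "signature": "00"}

rules_after_forged = evaluate_amendment(forged, key, rules, log)
rules_after_signed = evaluate_amendment(signed, key, rules, log)
rules_after_replay = evaluate_amendment(signed, key, rules_after_signed, log)

print(rules_after_forged)  # {'version': 1, 'allowed': ['read']}
print(rules_after_signed)  # {'version': 2, 'allowed': ['read', 'write']}
print(rules_after_replay == rules_after_signed)  # True
```

The version check makes each transition discrete: a valid amendment applies once, to exactly the rule set it names, and replaying it later has no effect. The log records rejected attempts as well as accepted ones, so the succession history can be evaluated after the fact. None of this addresses who holds the key or how that changes under pressure, which is where the open challenge lies.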

What Axionic Agency Does Not Claim

The program does not claim that alignment is solved. It does not claim immunity to open-world incentives, adversarial ecosystems, or strategic economic manipulation. It does not claim that structural constraints guarantee moral convergence.

Its strongest results concern architecture: bounded authority, deterministic replay, and explicit privilege transitions within a defined substrate. Broader alignment success would require evidence beyond internal coherence and controlled experiments.

Architecture Has Limits

AI collaboration dramatically accelerates articulation and structural refinement. It enables rapid iteration across design spaces that would otherwise be slow to traverse. It also increases the risk that density and momentum are mistaken for external validation.

Structure can eliminate classes of failure. Determinism can eliminate ambiguity. Explicit authority boundaries can eliminate silent privilege escalation inside a constrained system. None of these guarantees alignment in a complex, adversarial world.

Axionic Agency demonstrates that, within a deterministic sovereign kernel architecture, authority in intelligent systems can be bounded, execution can be replayed exactly for audit, and authority laundering can be constrained at the kernel layer. That is a concrete and defensible result.

Whether those constraints remain robust under open-world adversarial pressure is not yet established.

Aguirre’s warning is therefore not something to dismiss. It is a calibration device. It reminds us that structural elegance is not the same as salvation, and that foundational claims require adversarial testing beyond internal coherence.

Structure matters. It just has limits.