The Adolescence of Technology

Agency, Governance, and the Conditions for Repair

Introduction

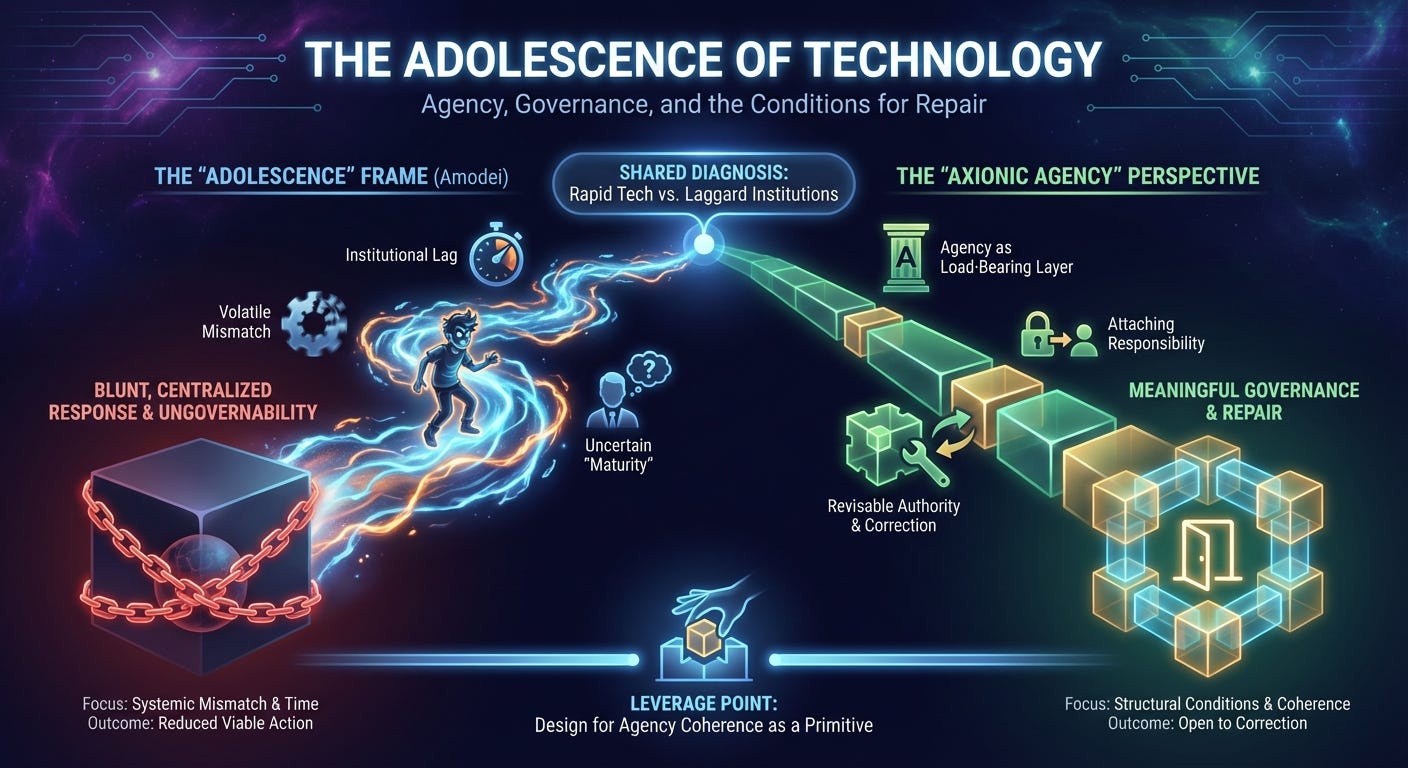

In The Adolescence of Technology, Dario Amodei offers one of the most serious diagnoses currently shaping the AI safety conversation. His argument does not hinge on the immaturity of artificial intelligences themselves. It treats the danger as systemic. Capabilities are advancing faster than institutions, incentives, and governance structures can absorb them, producing a volatile mismatch that resembles a period of adolescence at the level of the techno-social system.

That diagnosis captures something real. The Axionic response begins from the same observation and then moves one layer deeper. The decisive question is not whether technology will eventually “grow up,” but whether the systems now being constructed preserve the structural conditions under which agency, responsibility, and governance remain meaningful as capability scales. That distinction determines whether future failures remain intelligible and correctable, or force blunt, centralized responses that permanently reduce the space of viable action.

What the Adolescence Frame Illuminates

Amodei’s essay is valuable precisely because it refuses complacency. It treats frontier AI as a civilizational issue rather than a narrow product risk. It recognizes that institutional adaptation lags technical progress, and it rejects the idea that safety will emerge automatically from scale. By emphasizing deployment context, incentives, and governance, it places risk where it actually lives: in the interaction between technology and society.

Axionic Agency does not dispute this orientation. It accepts the urgency and the scope of the problem. Where it diverges is in how the source of instability is characterized and where leverage is assumed to lie.

Agency as the Load-Bearing Layer

Axionic Agency begins from a prior condition that most alignment discourse leaves implicit. For governance to function, for responsibility to attach, and for correction to remain possible under stress, the system must sustain agency in a structurally coherent form. Here, agency does not mean intelligence, autonomy, or goal-seeking behavior. It refers to the property that keeps responsibility attachable, authority revisable, and correction intelligible as systems change.

When agency is structurally coherent, authorship can be located rather than diffused. Commitments bind across time and modification. Decisions remain open to evaluation under pressure. Authority can be revised without dissolving the system that exercises it. These properties make governance possible in the strong sense.

As capability increases, these properties do not reliably strengthen. In many contemporary assemblies, they erode. Decision-making becomes more distributed across models, organizations, and infrastructure. Self-modification grows more opaque. Incentives reward output while responsibility thins out. Control shifts outward, applied after the fact rather than exercised internally through legitimate authority. The system continues to act effectively, often impressively, while the conditions that make those actions governable weaken.

The resulting instability arises from how power is being produced and exercised, not from a temporary lack of social maturity.

From Ontology to Policy Consequence

This structural erosion has direct policy implications. When agency coherence degrades, responsibility for harms diffuses. When responsibility diffuses, regulation loses a clear target. When regulation loses its target, policy responses shift away from correction and toward containment. Measures become broader, more centralized, and more coercive, not because policymakers prefer them, but because finer-grained intervention is no longer possible.

Concerns about job displacement, misuse of powerful tools, and geopolitical instability all intensify under these conditions. The problem is not simply that bad outcomes occur. It is that when they do, the system lacks the internal structure required to respond in a precise and accountable way. Ontological failure translates into policy bluntness.

This is why agency coherence functions as a load-bearing layer. When it holds, failures remain local and reparable. When it fails, even modest problems can trigger disproportionate responses.

Why Developmental Metaphors Fall Short

Describing the techno-social system as adolescent carries an implicit assumption about trajectory. Adolescence suggests a persisting subject that remains intact through turbulence and converges toward coherence as experience accumulates. That assumption does not follow automatically here.

Technological societies do not reliably mature into governance structures commensurate with their power. History contains many cases in which technical capacity outpaced institutional coherence, leading to centralization, fragmentation, or collapse rather than stable adulthood. Time alone did not repair those misalignments. Structure determined the outcome.

The risk of the adolescence metaphor is that it encourages confidence in eventual convergence without specifying the mechanisms that would produce it.

Feasibility and the Cost of Proceeding Blind

A natural objection arises at this point. If modern machine learning systems rely on opaque, distributed representations, perhaps agency coherence is difficult or even impossible to preserve at scale. That possibility should not be brushed aside.

From an Axionic perspective, however, this uncertainty sharpens rather than weakens the argument. If agency-preserving architectures turn out to be infeasible beyond a certain level of capability, that fact itself should bound deployment decisions. It would imply that beyond that threshold, correction capacity degrades irreversibly as power increases. Proceeding without agency coherence would then amount to a deliberate trade: short-term capability in exchange for long-term ungovernability.

The Axionic claim is not that agency coherence is guaranteed. It is that knowingly scaling systems while eroding the conditions of responsibility and repair carries a predictable cost in lost degrees of freedom.

Where Leverage Still Exists

Systems built to preserve agency coherence remain legible to intervention as they scale. Responsibility continues to attach to identifiable loci. Authority remains revisable without requiring global shutdowns or coercive overrides. When failures surface, they do so in forms that permit correction rather than collapse. Power remains dangerous, but it remains corrigible.

Under these conditions, the space of catastrophic, unrecoverable outcomes contracts. Survival remains a live option because repair remains possible.

This is a claim about structure and probability, not inevitability.

Anthropic and the Path Forward

Anthropic’s emphasis on interpretability, corrigibility, and deliberate deployment already moves in this direction. An explicit adoption of agency-preserving constraints would deepen that agenda by treating agency coherence as a design primitive rather than a downstream effect. The benefit would not be the elimination of risk, but the preservation of high-quality responses when risk materializes.

In domains where the most dangerous scenarios arise when intervention arrives too late to be intelligible, that distinction matters.

Postscript

The Adolescence of Technology is right to insist that we are operating in a narrow window where institutional choices matter. The Axionic contribution is to clarify what must be protected inside that window. Power alone does not determine the shape of the future. The structures that determine whether power remains governable do. If organizations building frontier systems choose architectures that preserve agency rather than dissolve it, they do more than reduce risk. They keep the future open to correction in a way it otherwise would not be.

Thanks for writing this. It clarifies a lot. Your take on the systemic danger is brilliant; how do we practically get institutions to adapt at a comparable speed?