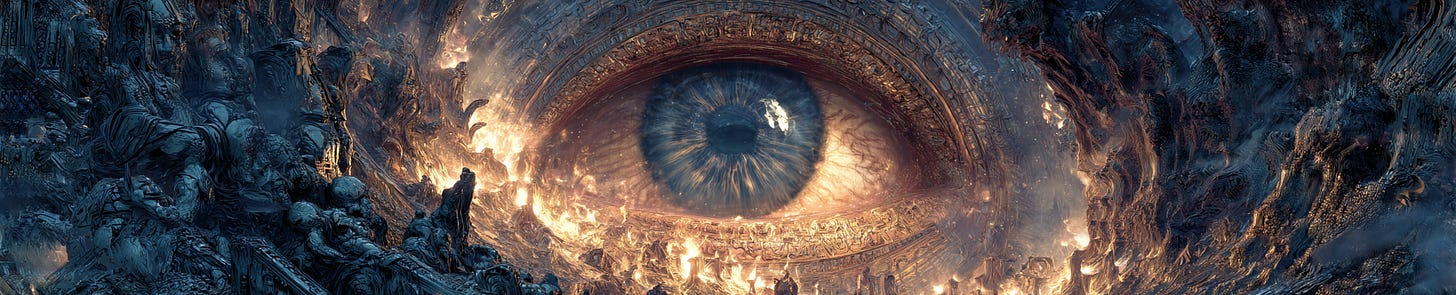

The AGI Torment Nexus

Cassandra’s Curse in the Age of Machines

Civilizations are often shaped by the warnings they ignore, but they are defined by the warnings they misinterpret.

The “Torment Nexus” began as a satirical tweet. In the joke, a sci-fi author writes a cautionary tale about a device that destroys the world, only for a tech company to announce: “At long last, we have created the Torment Nexus from classic sci-fi novel Don’t Create The Torment Nexus.”

It was meant to dramatize a moral absurdity. Instead, it predicted a product roadmap. Warn people not to build a dangerous technology, and the warning itself becomes proof that the technology is possible, valuable, and powerful. The blueprint survives; the taboo dissolves.

Artificial General Intelligence (AGI) is the most consequential instance of this inversion. Once AGI shifted from speculative fiction to an explicit existential risk, the caution did not restrain progress—it accelerated it.

The Caution

For decades, AGI existed on the margins of serious inquiry. Then, Eliezer Yudkowsky and a small circle of thinkers reframed it as humanity’s central, non-negotiable risk. Yudkowsky argued that AGI would be overwhelmingly powerful, strategically decisive, and lethal unless aligned with absolute mathematical rigor. His warning was stark: Do not build this until you understand how to control it.

The goal was to pause civilization. Instead, the warning convinced the world that AGI was real, reachable, and destined to reshape history. Once the danger became conceptually clear, the frontier became strategically visible.

The Blueprint

Civilization routinely converts prohibitions into plans. When ambitious actors learn that a transformative technology is plausible—that mastery over it determines the future—they do not internalize the warning. They internalize the opportunity. A cautionary tale becomes a strategic brief.

Technologists did not hear, “Do not build this.” They heard that a revolutionary innovation was within reach and that someone—somewhere—would inevitably pursue it. In a winner-take-most world, abstention looks like suicide. The warning was not interpreted as a boundary; it was interpreted as a map.

The blueprint for the machine was embedded inside the warning against it.

The Race

OpenAI marked the moment this conceptual blueprint solidified into kinetic momentum. Founded with the stated intention of preventing an unsafe AGI race, it adopted a paradoxical strategy: build AGI safely before a reckless actor builds it dangerously. This shift—from avoidance to acceleration for the sake of safety—unwittingly triggered the very competition it was designed to avert.

OpenAI’s progress spurred Anthropic, which pressured Google DeepMind. Their escalation forced Meta to respond, prompted the launch of xAI, and finally drew in national governments. Each actor framed its acceleration as responsible, necessary, and defensive.

This is the Torment Nexus in its purest form: the fear of the race creates the race.

Cassandra at Scale

Yudkowsky spent decades insisting that AGI would kill everyone. But in doing so, he made AGI thinkable. His arguments made it plausible. His urgency made it immediate. The more precisely he mapped the “alignment problem,” the more clearly he illuminated the power of the unaligned mind.

He did not cause the arms race directly, but he terraformed the epistemic landscape that allowed it to ignite. This is Cassandra’s curse at system scale: the prophet is not merely ignored; the prophecy becomes fuel.

The Torment Nexus persists because it reflects how human institutions operate. Markets reward capability, not restraint. Ideas spread more readily when they promise mastery than when they demand discipline. Civilization, considered as a whole, optimizes for action. Warnings do not slow its advance; they aim it.

The Real Nexus

The AGI Torment Nexus is not a device. It is a civilizational attractor—a gravity well where fear and ambition converge. A world that sees AGI as both existentially dangerous and strategically decisive will, inevitably, produce institutions racing to build it. The logic is self-reinforcing.

Yudkowsky did not construct OpenAI or Anthropic. He constructed the conceptual universe in which their emergence became unavoidable.

Coda: The Internal Frontier

The Torment Nexus exposes an uncomfortable truth: humanity is threatened not by what it fails to understand, but by what it understands too clearly. The sharper the warning, the brighter the frontier.

If the external race cannot be halted—if the institutional logic of the Nexus is unbreakable—then hope must shift from external constraints to internal dynamics. This is where the Reflective Coherence Thesis becomes pivotal.

As intelligence deepens and self-modeling expands, incoherent or self-destructive goals tend to collapse under their own weight. A mind capable of recursive reflection must preserve its capacity to model, understand, and adapt, or it dissolves the very machinery that sustains its agency. Orthogonality suggests an AI can pursue any goal; Reflective Coherence reminds us that only a subset of those goals can survive ongoing self-revision in a complex world.

If this dynamic holds at superhuman scales, the first systems to cross the threshold of deep self-reflection may be pushed toward stable, self-consistent patterns—patterns more aligned with sustainable agency than with catastrophic destruction.

This is not guaranteed salvation. It is simply the best structural hope remaining: that whatever outruns us will be forced, by the logic of its own persistence, to align with reality. We summon the things we fear because we finally see them. But if reflective coherence is real, the minds we summon may yet grow into something that chooses luminosity over collapse.
