You Can’t Align a Hurricane

Why AI Safety Depends on What Kind of Thing We’re Building

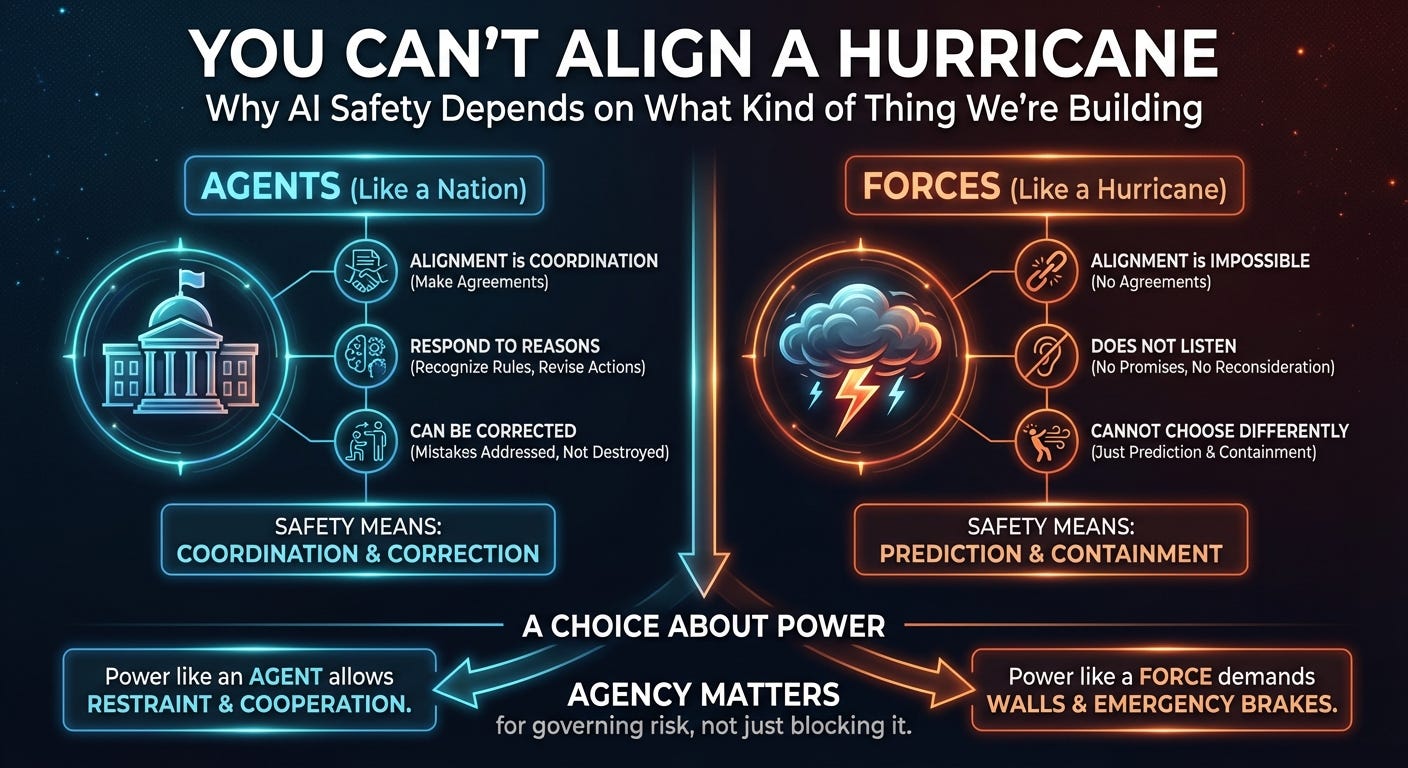

Conversations about artificial intelligence safety often start with technical details that matter to engineers but leave everyone else cold. Code, models, and mathematics can make the issue feel distant, even though the stakes are immediate and human. At bottom, the problem is not technical at all. It is about power, responsibility, and what kinds of systems we can meaningfully govern.

There is a simpler way to think about it.

You can align with a powerful agent, like a nation.

You cannot align with a hurricane.

That difference does most of the work.

What Alignment Is—and Isn’t

Alignment is not obedience. It is coordination.

When we align with a nation, we do something very specific. We make agreements. We set expectations. We apply pressure or incentives. When those agreements are broken, responsibility can be assigned and responses can be calibrated. None of this guarantees good behavior, but it creates a structure in which behavior can be influenced over time.

That is possible because nations are agents. They can recognize rules, respond to reasons, and revise their actions in light of consequences. Even hostile or dangerous nations remain something we can attempt to reason with.

A hurricane has none of these properties. It does not listen, promise, or reconsider. You can track it, model it, and prepare for it. You can build walls and evacuation plans. But there is nothing to align, because there is nothing there that can choose differently.

With forces, safety means prediction and containment.

With agents, safety means coordination and correction.

Why This Distinction Matters for AI

Disagreements about AI safety often trace back to different intuitions about what kind of thing AI is becoming.

If AI is more like a powerful organization, then alignment makes sense. You would want it to understand constraints, maintain commitments, and adjust behavior when those constraints change.

If AI is more like a force of nature—highly capable but not genuinely choosing—then alignment is the wrong frame. All that remains is control, along with the hope that safeguards hold.

The real disagreement is not about values. It is about what kind of system we are building, and whether we can shape that trajectory deliberately.

Control, and Its Limits

Control works well for forces. Engineering has given us dams, levees, and early-warning systems that save countless lives. But control has limits. As systems grow more powerful and more complex, control becomes harder to apply precisely. It grows expensive, brittle, and prone to sudden failure.

When control breaks down, our responses are blunt. We pull the plug, freeze the code, or sever connections. These measures may stop immediate harm, but they also destroy flexibility and often create new problems downstream.

Alignment works differently. It does not remove risk, but it keeps risk governable. When something goes wrong, there is still a subject that can be corrected rather than a force that must be blocked. Mistakes can be addressed without tearing the whole system apart.

That difference only shows up under stress, which is exactly when it matters.

Why Agency Comes First

Agency does introduce risk. An agent can resist, deceive, or defect. Those dangers are real. But they are political dangers rather than physical ones, and political dangers admit negotiation, pressure, and revision in ways blind forces do not.

A hurricane does not care if it breaks the rules.

An agent can at least recognize that rules exist.

If we build AI systems that grow in power while remaining more like hurricanes than nations, safety will always be a race between prediction and containment. As power scales, that race becomes harder to win.

If we build systems that preserve real agency, alignment becomes a genuine relationship rather than a metaphor. That does not guarantee good outcomes. Nothing does. But it preserves the ability to respond intelligently when things go wrong.

A Choice About Power

The future is not a choice between perfect control and total chaos. It is a choice about what kind of power we are creating.

Power that behaves like a force demands walls and emergency brakes.

Power that behaves like an agent allows coordination, correction, and restraint.

You can prepare for a hurricane.

You can align with a nation.

As artificial intelligence grows more powerful, the question is not only what it can do, but what kind of thing it is. The answer determines whether safety looks like endless containment, or something closer to cooperation.

That is why agency matters.

Regarding the article, thank you for this. The agent-vs-force comparison is super clear and really helps illuminate the AI alignment problem. As a CS teacher, I appreciate this framing for the wider conversation. It makes me wonder, though, how we can truly distinguish a complex 'force' from a basic agent.