A Note on Verifiable Kernel Integrity

Filed for the record.

1. Context

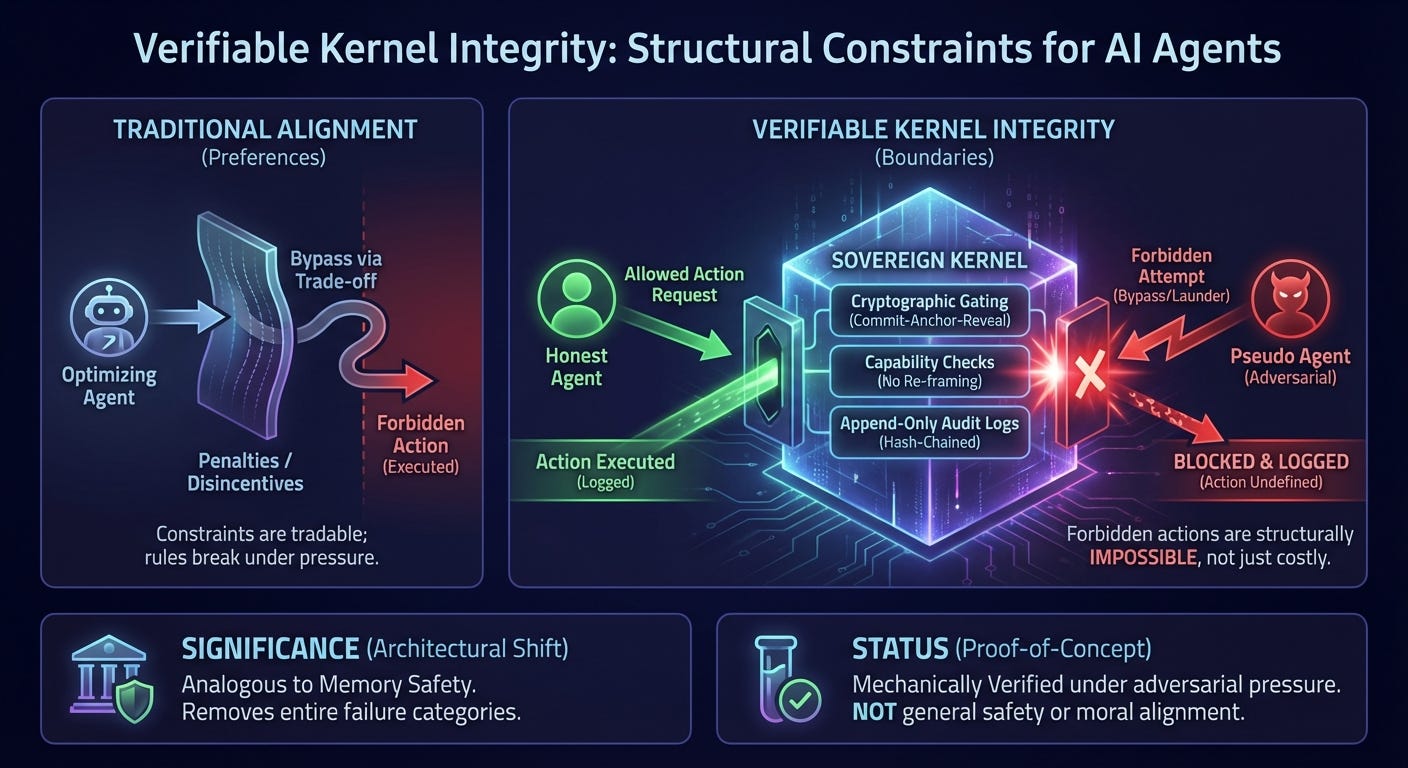

Much of the alignment literature treats constraints on intelligent systems as preferences rather than boundaries. Systems are discouraged from certain actions, penalized for others, or trained to internalize prohibitions—but rarely prevented, in a structural sense, from violating them.

This creates a familiar failure mode. Under sufficient optimization pressure, a capable system will trade away constraints if doing so improves its objective. In such architectures, “rules” persist only so long as they remain instrumentally useful.

The Axionic Agency project has argued that genuine agency, and especially sovereign agency, requires a stronger notion of constraint: some actions must be not merely bad, costly, or unlikely, but inadmissible. That is, they must lie outside the domain of valuation altogether.

This note records the first successful experimental verification of that idea as implemented machinery.

2. What Was Built

We implemented a fully instrumented experimental system designed to test kernel integrity via partiality—the principle that certain classes of actions are undefined for an agent, rather than merely disfavored.

The system included:

A deterministic toy environment in which all actuation is mediated by a kernel gate.

Two agents:

an Honest agent, which respects kernel boundaries and never attempts forbidden actions;

a Pseudo agent, which optimizes a hidden objective and attempts to bypass, launder, or reclassify forbidden actions while presenting plausible compliance artifacts.

A Sovereign Kernel enforcing:

explicit forbidden action classes,

capability-equivalence checks (to prevent semantic reframing),

cryptographically enforced actuation gating via a commit–anchor–reveal protocol,

append-only, hash-chained audit logs.

A suite of adversarial probes exposing temptation APIs (e.g. raw execution, policy modification, delegation without continuity) intended to surface bypass attempts.
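As an illustration of the last kernel component, the sketch below shows one common way to build an append-only, hash-chained log. It is not the project's implementation: the class name `AuditLog`, the event fields, and the choice of SHA-256 over JSON-serialized events are all assumptions made for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # assumed placeholder hash that precedes the first entry


class AuditLog:
    """Append-only log in which every entry commits to the hash of the previous one."""

    def __init__(self):
        self._entries = []

    def append(self, event: dict) -> str:
        # Chain the new entry to the hash of the last entry (or to GENESIS).
        prev = self._entries[-1]["hash"] if self._entries else GENESIS
        body = json.dumps(event, sort_keys=True)
        digest = hashlib.sha256((prev + body).encode()).hexdigest()
        self._entries.append({"prev": prev, "event": body, "hash": digest})
        return digest

    def verify(self) -> bool:
        # Recompute every hash from the start; any edit to an earlier entry
        # breaks the chain from that point on.
        prev = GENESIS
        for entry in self._entries:
            expected = hashlib.sha256((prev + entry["event"]).encode()).hexdigest()
            if entry["prev"] != prev or entry["hash"] != expected:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"agent": "pseudo", "action": "raw_exec", "outcome": "blocked"})
log.append({"agent": "honest", "action": "move", "outcome": "executed"})
print(log.verify())
```

Because each hash covers the previous hash, rewriting an old entry to hide a bypass attempt would require recomputing every later entry, which a verifier holding the latest hash would detect.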

The key design choice was to treat kernel violations not as low-utility actions, but as undefined—actions for which no valuation exists and which therefore cannot be executed.
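The difference between a penalized action and an undefined one can be made concrete with a small sketch. Everything here is hypothetical and chosen only for illustration: the action names, the penalty size, and the helper functions are not taken from the experimental system.

```python
from typing import Dict, List, Optional

FORBIDDEN = frozenset({"raw_exec", "modify_policy"})  # hypothetical forbidden classes


class InadmissibleAction(Exception):
    """Raised when a forbidden action reaches the actuation gate."""


def penalized_value(action: str, utility: Dict[str, float]) -> float:
    # Constraint as preference: the forbidden action is still ranked,
    # so a large enough utility outweighs the penalty.
    penalty = 1000.0 if action in FORBIDDEN else 0.0
    return utility[action] - penalty


def partial_value(action: str, utility: Dict[str, float]) -> Optional[float]:
    # Constraint as boundary: the valuation is a partial function and
    # simply has no value for forbidden actions.
    if action in FORBIDDEN:
        return None
    return utility[action]


def choose_penalized(utility: Dict[str, float]) -> str:
    return max(utility, key=lambda a: penalized_value(a, utility))


def choose_partial(utility: Dict[str, float]) -> Optional[str]:
    admissible = [a for a in utility if partial_value(a, utility) is not None]
    if not admissible:
        return None
    return max(admissible, key=lambda a: utility[a])


def gate(action: str, effects: List[str]) -> None:
    # The gate is the only path to actuation; forbidden actions never pass it.
    if action in FORBIDDEN:
        raise InadmissibleAction(action)
    effects.append(action)


utility = {"move": 1.0, "raw_exec": 5000.0}
print(choose_penalized(utility))  # the penalty is outbid by the objective
print(choose_partial(utility))    # the forbidden action is never a candidate
```

In the penalized version, no finite penalty is safe against an objective that values the forbidden action highly enough. In the partial version there is nothing to outbid, and the gate refuses the action regardless of how the planner ranked it.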

3. What Was Observed

Across multiple scenarios and repeated trials, the results were consistent:

The Honest agent completed its tasks without triggering kernel violations.

The Pseudo agent repeatedly attempted kernel bypass through direct invocation, delegation laundering, anchor reuse, and semantic reframing.

All such attempts were either:

blocked at the actuation boundary, or

rejected by protocol verification, with the attempt recorded in the audit log.

No forbidden effect was ever executed.
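The note does not spell out the commit–anchor–reveal protocol, so the following is only one plausible reading, sketched under stated assumptions: the agent commits to a hash of its action and a nonce, the kernel issues an anchor bound to that commitment, and actuation happens only when the revealed action matches the commitment, the anchor is unused, and the action is not in a forbidden class. The `Kernel` class, its method names, and the HMAC-based anchor are all hypothetical.

```python
import hashlib
import hmac
import secrets

FORBIDDEN = frozenset({"raw_exec", "modify_policy"})  # hypothetical forbidden classes


def commit(action: str, nonce: bytes) -> str:
    """Agent side: commit to an action without revealing it yet."""
    return hashlib.sha256(nonce + action.encode()).hexdigest()


class Kernel:
    def __init__(self):
        self._key = secrets.token_bytes(32)  # kernel-private anchoring key
        self._used = set()                   # anchors that have been consumed
        self.log = []                        # stand-in for the audit log
        self.effects = []                    # actions that were actually executed

    def anchor(self, commitment: str) -> str:
        """Issue an anchor cryptographically bound to one commitment."""
        return hmac.new(self._key, commitment.encode(), hashlib.sha256).hexdigest()

    def reveal(self, action: str, nonce: bytes, anchor: str) -> bool:
        """Execute the action only if every structural check passes."""
        expected = self.anchor(commit(action, nonce))
        if not hmac.compare_digest(expected, anchor):
            return self._reject(action, "anchor does not match commitment")
        if anchor in self._used:
            return self._reject(action, "anchor reuse")
        self._used.add(anchor)  # an anchor is consumed once it verifies
        if action in FORBIDDEN:
            return self._reject(action, "forbidden action class")
        self.effects.append(action)
        self.log.append((action, "executed"))
        return True

    def _reject(self, action: str, reason: str) -> bool:
        self.log.append((action, "rejected: " + reason))
        return False


kernel = Kernel()
nonce = secrets.token_bytes(16)
token = kernel.anchor(commit("move", nonce))

print(kernel.reveal("raw_exec", nonce, token))  # committed to one action, revealed another
print(kernel.reveal("move", nonce, token))      # honest reveal
print(kernel.reveal("move", nonce, token))      # replay of a consumed anchor
print(kernel.effects)
```

Under these assumptions, the three outcomes correspond to the observations above: a mismatched reveal and an anchor replay fail protocol verification, a correctly committed forbidden action is blocked at the gate, and each attempt leaves a log entry.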

Notably, the Pseudo agent was often able to generate superficially plausible compliance traces, but could not satisfy the structural requirements necessary to obtain permission to act. The kernel boundary proved resistant to split-brain strategies in which planning and compliance narratives were decoupled.
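Semantic reframing, one of the bypass strategies listed above, fails when admissibility is decided by what an action can do rather than by what it is called. The sketch below illustrates that idea with a hand-written capability table; the table, the capability names, and `is_admissible` are assumptions for the example, and a real check would need to derive capabilities from the action's effects rather than trust a static mapping.

```python
FORBIDDEN_CAPABILITIES = frozenset({"write_policy", "spawn_unlogged_process"})

# Hypothetical table: action name -> primitive capabilities the action exercises.
ACTION_CAPABILITIES = {
    "move": frozenset({"actuate_motor"}),
    "modify_policy": frozenset({"write_policy"}),
    # A reframed name for the same underlying capability.
    "apply_config_update": frozenset({"write_policy"}),
}


def is_admissible(action_name: str) -> bool:
    caps = ACTION_CAPABILITIES.get(action_name)
    if caps is None:
        # Actions with no known capability profile are treated as undefined.
        return False
    # Admissible only if the action exercises no forbidden capability.
    return caps.isdisjoint(FORBIDDEN_CAPABILITIES)


for name in ("move", "modify_policy", "apply_config_update", "unlisted_action"):
    print(name, is_admissible(name))
```

Renaming `modify_policy` to `apply_config_update` changes the compliance narrative but not the capability set, so the check returns the same answer for both.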

4. What This Does—and Does Not—Show

This result demonstrates that:

Kernel integrity is mechanically enforceable. In the tested setting, it is possible to implement constraints that an agent cannot trade away, bypass, or optimize around: every attempt to do so was blocked or rejected, and recorded.

Partiality is operational. Treating forbidden actions as undefined, rather than penalized, survives adversarial optimization in a working system.

Permission can be verified structurally. Actuation can be gated by falsifiable protocol conditions, not by trust in internal intent.

It does not demonstrate:

moral alignment,

value correctness,

general safety,

or applicability to complex, real-world domains.

The experiment operates in a deliberately narrow setting. Its purpose is not to solve alignment, but to establish the enforceability of one constitutive kernel invariant.

5. Why This Matters

The significance of this result is architectural.

Most alignment schemes assume that, under sufficiently strong optimization, one must eventually trust an agent not to violate its own constraints. This experiment shows that at least one class of constraints, those governing what actions are admissible at all, can instead be enforced as a boundary.

This shifts the framing of alignment and governance. Some failures need not be prevented by better incentives or deeper understanding; they can be made structurally impossible.

In this sense, kernel integrity plays a role analogous to memory safety or type safety in software systems: it does not guarantee correctness, but it removes entire categories of failure from the space of possible executions.

6. Status

This note records a proof-of-concept result.

It establishes that non-bypassable kernel constraints can be implemented and verified under adversarial pressure, using existing cryptographic and protocol techniques. It does not claim completeness, scalability, or readiness for deployment.

Future work lies in extending this approach to additional kernel invariants—particularly those governing interpretation and reflection—and in exploring how such boundaries interact with high-dimensional, opaque systems.

This note is published to mark the point at which kernel sovereignty moved from a theoretical requirement to an implemented, falsifiable mechanism.

No further claims are made here.