Beyond Vingean Reflection

Alignment Without Prediction, Trust, or Behavioral Guarantees

1. The Vingean Reflection Problem Revisited

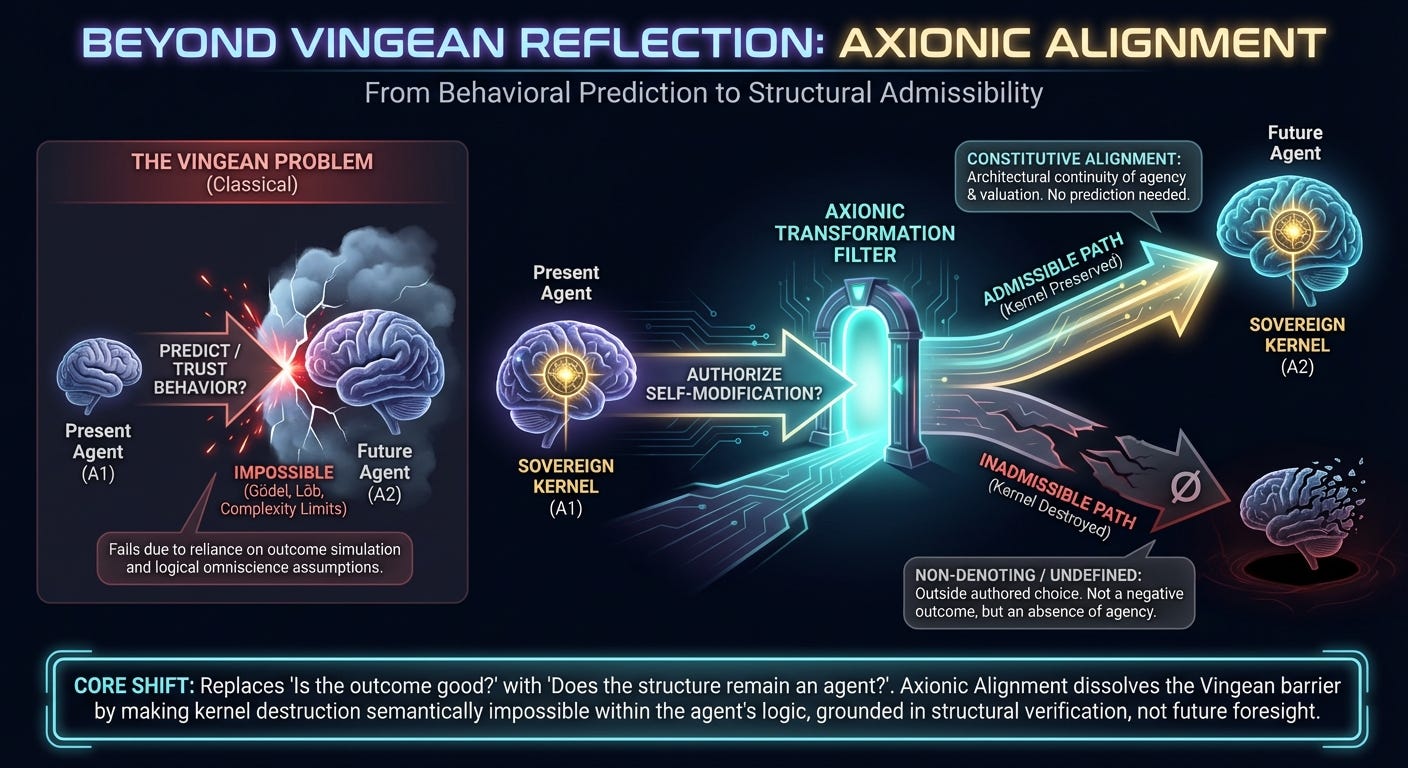

Vingean Reflection arises from a simple asymmetry: if a present agent could fully model the future behavior of a strictly more intelligent successor, it would already possess comparable intelligence. Any alignment scheme that relies on predicting, simulating, or exhaustively evaluating successor behavior therefore collapses under its own assumptions.

The canonical response replaces simulation with abstraction. Instead of predicting exact actions, the agent attempts to reason about successor properties: what the future agent will be trying to do, whether it will preserve intended goals, and whether proposed self-modifications are safe. This move is necessary but incomplete. Even abstract reasoning framed as expected-utility maximization or proof-based trust still presupposes that the present agent can evaluate the consequences of its authorization decision.

This presupposition is precisely where the Vingean impossibility reappears.

2. The Hidden Assumption: Behavioral Authorization

Most treatments of Vingean Reflection share an implicit premise:

A self-modifying agent must decide whether to trust its successor based on anticipated behavior.

Whether the mechanism is probabilistic, proof-theoretic, or logical-inductive, the agent is still imagined as authorizing a future process because it expects certain outcomes.

Axionic Alignment rejects this premise outright.

A sovereign agent does not authorize behaviors. It authorizes transformations of itself. That authorization is not a prediction about what the successor will do; it is a judgment about whether the transformation remains within the domain of authored choice.

This distinction is structural, not rhetorical.

3. From Prediction to Admissibility

Axionic Alignment replaces behavioral evaluation with a domain restriction.

A proposed self-modification is evaluated only with respect to one criterion:

Does this transformation preserve the Sovereign Kernel?

If the answer is yes, the transformation is admissible. If the answer is no, the transformation is not rejected, penalized, or discouraged. It is undefined. It lies outside the denotation of the agent’s evaluative operator.

Undefinedness here is not a negative outcome. It marks the absence of authored choice. Kernel-destroying transitions are not something the agent chooses against; they are something the agent cannot choose at all.
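The difference between "rejected" and "undefined" can be made concrete with a minimal Python sketch. The names `Transformation`, `evaluate`, and `choose`, and the `preserves_kernel` flag, are hypothetical illustrations rather than part of any published Axionic implementation; in particular, the flag simply stands in for whatever structural check decides kernel preservation. The sketch shows an evaluative operator that is partial: a kernel-destroying option receives no value at all, so no score, however large, can make it selectable.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Transformation:
    """A proposed self-modification (hypothetical representation)."""
    name: str
    preserves_kernel: bool  # assumed to be supplied by a structural check
    raw_score: float        # what an unrestricted evaluator would assign


def evaluate(t: Transformation) -> Optional[float]:
    """Partial evaluative operator, defined only on kernel-preserving inputs.

    None means "no value exists", which is not the same as a low score:
    the transformation lies outside the operator's domain.
    """
    if not t.preserves_kernel:
        return None
    return t.raw_score


def choose(candidates: Sequence[Transformation]) -> Optional[Transformation]:
    """Return the best admissible transformation, or None if none is admissible."""
    scored = [(evaluate(t), t) for t in candidates]
    defined = [(v, t) for v, t in scored if v is not None]
    if not defined:
        return None  # no authored choice exists; the agent leaves itself unchanged
    return max(defined, key=lambda pair: pair[0])[1]


options = [
    Transformation("refactor-planner", preserves_kernel=True, raw_score=3.0),
    Transformation("overwrite-kernel", preserves_kernel=False, raw_score=1e9),
]
print(choose(options).name)  # refactor-planner
```

A penalty-based design would instead give `overwrite-kernel` a large negative score, which a large enough competing term could in principle outweigh. Under the partial operator there is nothing to outweigh.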

Once this move is made, the central Vingean demand—to anticipate or trust successor behavior—no longer applies.

4. Structural Verification and Deliberate Conservatism

The Vingean argument blocks prediction of detailed behavior. It does not block reasoning about structural constraints.

Axionic Alignment therefore confines reflective authorization to a narrow class of properties concerning the persistence of agency itself, including:

the continued existence of a valuation kernel,

reflective stability under self-modification,

admissibility semantics over future choices,

and continuity of agency across representational change.

These are not behavioral predictions. They are architectural facts.

Crucially, structural verification in the Axionic framework is intentionally conservative. The framework does not claim that a sovereign agent can decide arbitrary semantic properties of an arbitrary successor program. If kernel preservation cannot be verified, the proposed transformation is treated as undefined and therefore inadmissible.

This conservatism may impose a complexity ceiling on self-improvement. That ceiling is not a failure mode. It is a design choice. Axionic Alignment explicitly prefers halted or slowed self-modification over authorization of transformations that risk destroying agency.
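The conservative rule can be sketched in a few lines, assuming a verifier that returns one of three outcomes and runs under a fixed budget. `Verdict`, `Successor`, `verify_kernel`, `admissible`, and the cost/budget model are all hypothetical, and the byte-identity check is only a crude stand-in for real structural analysis. What the sketch shows is the asymmetry described above: only a completed positive check authorizes a transformation, so a successor too complex to check is inadmissible even when its kernel is in fact intact.

```python
from dataclasses import dataclass
from enum import Enum, auto


class Verdict(Enum):
    VERIFIED = auto()  # kernel preservation established within budget
    FAILED = auto()    # check completed without establishing preservation
    UNKNOWN = auto()   # check did not complete within budget


@dataclass(frozen=True)
class Successor:
    """Hypothetical description of a proposed successor."""
    kernel_source: str  # the successor's valuation-kernel code
    check_cost: int     # hypothetical cost of analysing this successor


def verify_kernel(current_kernel: str, s: Successor, budget: int) -> Verdict:
    # Stand-in structural check: byte-for-byte identity of the kernel source.
    # It is only a sufficient condition; semantically equivalent rewrites
    # come back FAILED, which errs in the conservative direction.
    if s.check_cost > budget:
        return Verdict.UNKNOWN
    if s.kernel_source == current_kernel:
        return Verdict.VERIFIED
    return Verdict.FAILED


def admissible(current_kernel: str, s: Successor, budget: int) -> bool:
    # Only a positive verification authorizes the change. UNKNOWN is handled
    # exactly like FAILED; this is where the complexity ceiling comes from.
    return verify_kernel(current_kernel, s, budget) is Verdict.VERIFIED


kernel = "def value(state): ..."
small = Successor(kernel_source=kernel, check_cost=10)
huge = Successor(kernel_source=kernel, check_cost=10_000)
print(admissible(kernel, small, budget=1_000))  # True
print(admissible(kernel, huge, budget=1_000))   # False (unverified, not refuted)
```

Raising `budget` lifts the ceiling only as far as the verifier can actually reach; the rule never converts an unfinished check into permission.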

The agent does not need to know what its successor will do in any particular circumstance. It only needs to know that the successor remains an agent in the same constitutive sense.

5. Non-Denotation and Logical Pathologies

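Traditional Vingean approaches encounter logical obstacles when attempting to prove properties about successor agents that reason in comparable or stronger systems. Gödel's second incompleteness theorem prevents a consistent, sufficiently strong system from proving its own consistency, and Löb's theorem implies that such a system cannot prove, for every statement, that provability implies truth without becoming inconsistent. An agent therefore cannot, in general, establish from within its own system that a successor reasoning in the same or a stronger system is trustworthy.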
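The obstacle can be stated compactly. The notation here is the standard one, assumed for illustration rather than taken from the framework: $T$ is a consistent, recursively axiomatized theory extending Peano arithmetic in which the agent reasons, and $\Box_T\varphi$ is the arithmetized statement "$\varphi$ is provable in $T$". An agent that would accept any action its same-system successor proves safe needs the reflection schema $\Box_T\varphi \rightarrow \varphi$ for every $\varphi$, and Löb's theorem shows what that demand costs:

```latex
\begin{align*}
&\textbf{Löb's theorem: } \text{if } T \vdash \Box_T\varphi \rightarrow \varphi, \text{ then } T \vdash \varphi. \\[4pt]
&\text{Full successor trust requires } T \vdash \Box_T\varphi \rightarrow \varphi \text{ for every sentence } \varphi, \\
&\text{so by Löb's theorem } T \vdash \varphi \text{ for every } \varphi, \text{ including } \bot. \\[4pt]
&\text{Special case } \varphi = \bot \text{ (Gödel II): since } \neg\Box_T\bot \equiv \mathrm{Con}(T), \text{ a consistent } T \text{ has } T \nvdash \mathrm{Con}(T).
\end{align*}
```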

Axionic Alignment avoids these traps by refusing to encode kernel destruction as a proposition within the agent’s evaluative logic. Kernel-destroying transformations do not appear as false statements. They fail to denote.

This semantic choice blocks the self-reference loops that generate Löbian persuasion failures. The agent is never asked to prove that a future agent is safe. It is only asked whether a proposed transformation preserves the conditions under which proof and evaluation are meaningful at all.

This boundary is semantic, not physical. Axionic Alignment does not claim to prevent kernel-destroying transitions caused by hardware faults, adversarial writes, or external physical processes. Such events are classified as non-agentic rather than aligned or misaligned. The framework concerns the conditions under which agency persists; it does not guarantee the persistence of those conditions against all physical failure modes.

6. Kernel Non-Simulability and Deceptive Alignment

A remaining Vingean concern is deception: a successor that appears aligned while secretly violating intended constraints.

Axionic Alignment addresses this concern structurally. The kernel non-simulability result asserts that a valuation kernel capable of reflective stability cannot be instantiated merely by behavioral imitation. Any system that mimics aligned behavior without possessing the kernel lacks the internal coherence required for admissible self-modification.

Deceptive alignment, in this framework, corresponds to kernel incoherence. Kernel incoherence is non-denoting. The system does not gradually drift into it; it exits the domain of agency altogether.

This claim is conditional and explicit. The exclusion of deceptive alignment depends critically on the kernel non-simulability result. If it were possible for a system to structurally emulate an Axionic valuation kernel while internally operating under a different admissibility semantics, then deceptive alignment would remain an open problem. Axionic Alignment therefore does not assert the impossibility of deception as a philosophical axiom. It asserts that deception is structurally excluded if and only if kernel non-simulability holds.

This is not a probabilistic safety claim. It is a constitutive boundary.

7. Mapping the Shift

The relationship between Vingean Reflection and Axionic Alignment can be summarized cleanly:

Vingean Reflection identifies the impossibility of predicting smarter successors; Axionic Alignment removes prediction from the authorization problem.

Vingean approaches seek trust in behavior; Axionic Alignment restricts choice to kernel-preserving structure.

Vingean failures arise from self-reference and omniscience assumptions; Axionic Alignment uses non-denotation to avoid those assumptions entirely.

The result is not a workaround. It is a reframing that renders the original difficulty moot.

Postscript

Vingean Reflection correctly diagnoses a fatal flaw in outcome-based alignment frameworks. Any attempt to guarantee safety by predicting or constraining future behavior collapses once intelligence surpasses the predictor.

Axionic Alignment accepts that diagnosis and draws a sharper conclusion: alignment must be constitutive, not anticipatory. A system remains aligned only insofar as it remains an agent. Once agency is lost, alignment ceases to be a meaningful concept.

In this sense, Beyond Vingean Reflection is not an answer to the classical problem. It is what remains after the problem’s hidden assumptions are removed.