Against Civilizational Optimization

Rationality, Metrics, and the Conservation of Agency

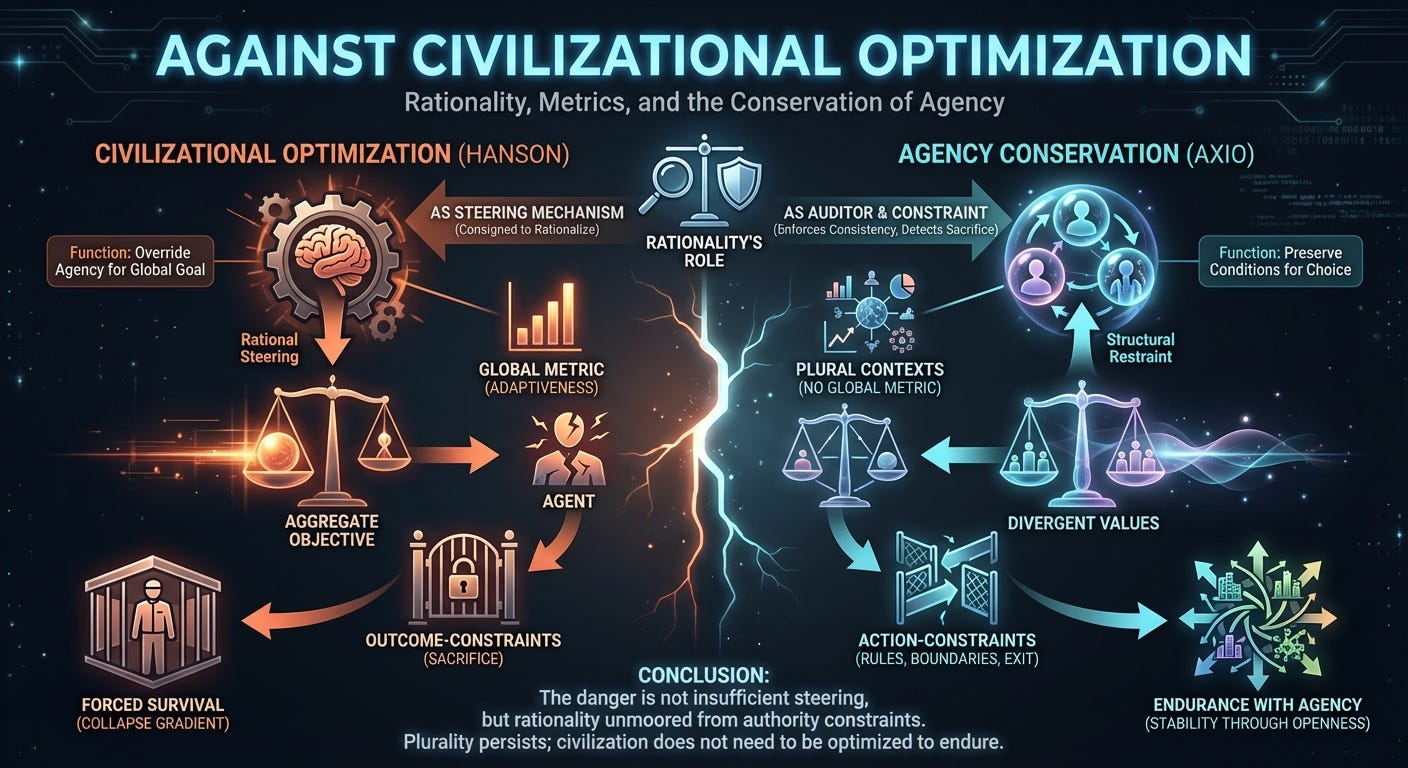

Robin Hanson frames the present moment as a crisis of rational commitment. Civilization, he argues, has elevated abstract reasoning without grounding it in adaptiveness, producing cultural drift and institutional decay. From this diagnosis he derives a trilemma: abandon rationality and return to tradition, continue with half-measures and accept collapse, or commit fully to stronger, more explicitly adaptive forms of rational steering. His pessimism follows naturally. If rationality is our steering mechanism and we refuse to use it decisively, decline becomes the default trajectory.

The diagnosis identifies a real pressure. The conclusion misidentifies its source.

The failure is not that rationality has been insufficiently empowered. It is that rationality has been assigned a role it cannot coherently play. The assumption that civilization must be steered at all, and that rationality’s proper function is to perform that steering, already presupposes the mechanism that produces collapse. This argument does not deny existential risk; it denies that any authority is entitled to resolve existential risk by overriding agentic choice.

Civilization Is Not an Optimization Target

Hanson’s argument depends on an unspoken but decisive premise: that civilization constitutes a system with a well-defined objective function, such that “adaptiveness” can be meaningfully specified, measured, and optimized over time. Once this premise is accepted, the remainder of the argument becomes almost tautological. Steering requires goals, goals require metrics, metrics require tradeoffs, and tradeoffs require justification. Rationality is then tasked with supplying that justification in a more disciplined and farsighted form.

Axio rejects the initial premise. Civilization is not an agent, not a unified optimizer, and not a system whose future can be ranked without remainder. It is a plurality of agents, each embedded in distinct contexts, possessing divergent values, and inhabiting futures that are not commensurable in aggregate. Treating such a plurality as a single optimization problem is not merely difficult; it is ill-typed. The category error occurs before any discussion of metrics or institutions begins.

Why Metrics Necessarily Produce Sacrifice

Any attempt to steer civilization requires a scalar ranking of outcomes, a function that collapses complex, multidimensional world states into a single ordering so that some futures can be declared preferable to others. This collapse is not an incidental modeling convenience; it is the defining act of optimization. Once such a metric exists, the system is licensed to prefer transitions that improve the score, even when those transitions impose losses on particular agents.

Because agent-relative outcomes cannot be collapsed into a single scalar ordering without loss, some dimensions must be ignored, discounted, or overridden. When optimization pressure is applied, any gradient that rewards harm becomes instrumentally valuable. At that point, the system learns that reducing some agents’ degrees of freedom improves its objective, and the reduction ceases to be accidental. It becomes structural.

This is the Sacrifice Pattern in its general form. It does not arise from bad intentions, crude metrics, or insufficient foresight. It arises from the existence of an aggregate objective applied across non-consenting agents. Refining the metric does not eliminate sacrifice; it increases its efficiency.
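The mechanism described in this section can be illustrated with a toy calculation. The sketch below is only an illustration under stated assumptions: the three agents, the welfare numbers, and the choice of a plain sum as the aggregate metric are all hypothetical, and the names `total`, `prefers`, and `losers` are introduced here for the example. It shows two things the argument relies on: a scalar score cannot distinguish world states that differ sharply for particular agents, and the score can rise on a transition that leaves one agent worse off.

```python
from typing import Callable, List, Sequence

# One entry per agent, in hypothetical welfare units (an assumption of this sketch).
Outcome = Sequence[float]


def total(outcome: Outcome) -> float:
    """Hypothetical aggregate metric: the plain sum of agent-relative outcomes."""
    return sum(outcome)


def prefers(metric: Callable[[Outcome], float], before: Outcome, after: Outcome) -> bool:
    """True when the metric scores `after` strictly higher than `before`."""
    return metric(after) > metric(before)


def losers(before: Outcome, after: Outcome) -> List[int]:
    """Indices of agents who end up worse off under the transition."""
    return [i for i, (b, a) in enumerate(zip(before, after)) if a < b]


status_quo = [5.0, 5.0, 5.0]
lopsided = [13.0, 1.0, 1.0]   # same sum as the status quo, very different lives
proposal = [9.0, 9.0, 1.0]    # agent 2 loses; the sum rises from 15 to 19

# The scalar cannot tell these two states apart: information has been lost.
print(total(status_quo) == total(lopsided))   # True

# The metric endorses the transition, and the loss to agent 2 is invisible to it.
print(prefers(total, status_quo, proposal))   # True
print(losers(status_quo, proposal))           # [2]
```

Swapping the sum for a weighted or more elaborate aggregate changes which agents lose, not whether the score can improve while someone does. The example demonstrates the pattern for one metric; it is not a proof of the general claim.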

Survival Is Not a Free Constraint

It is tempting to treat survival as a special case—an axiom rather than a metric, a prerequisite rather than an objective. This temptation is mistaken. “Civilization must survive” is already an aggregate claim that presupposes a privileged description of which civilization, in which form, and at whose expense. Enforcing survival as a global constraint across non-consenting agents requires overriding their choices in precisely the manner forbidden by agency conservation.

A binary constraint differs from a scalar metric only in smoothness, not in authorization. Once a system is entitled to declare certain futures unacceptable regardless of agent preference, optimization pressure fills in the remainder. There is no stable stopping point between “must survive” and “must maximize.” Survival enforced by coercion preserves continuity at the cost of agency, and what persists under those conditions is no longer the system it claims to protect.

Rationality Did Not Drive Cultural Drift

Hanson attributes cultural maladaptation to the elevation of abstract reasoning as a status practice among modern elites, suggesting that rational critique justified changes without regard for long-run viability. This assigns causal agency to rationality that it does not possess.

Rationality does not steer societies. Institutions do. Incentive structures do. Power gradients do. Rational critique can expose contradictions, reveal incoherences, and destabilize narratives, but it does not compel adoption. When cultural change becomes maladaptive, the failure lies in governance architectures that permit outcome optimization to override agency preservation, not in the act of reasoning itself.

Leviathan does not emerge because people reason too much. It emerges because centralized systems discover that suppressing choice improves metrics, and because nothing in their architecture forbids them from acting on that discovery. Rationality is not the engine of this process; it is the tool that gets conscripted to rationalize it.

Moloch Is a Signature, Not an Enemy

Multipolar traps and competitive spirals are often summarized under the name “Moloch” and invoked as evidence that steering is unavoidable. This reverses the causal arrow. Moloch is not an external adversary that pluralistic systems must defeat; it is a diagnostic signature of systems that aggregate agents without consent and then apply outcome pressure across that aggregation.

Moloch appears when coordination is attempted through outcome metrics rather than action constraints, when losses are justified by global pressure, and when exit is subordinated to performance. Attempting to defeat Moloch by intensifying civilizational steering reinstates the very mechanism that generates it. The name describes the failure mode; it does not license the cure.

The False Trilemma

The trilemma Hanson presents—retreat, collapse, or full rational steering—rests on the assumption that steering is unavoidable. Once that assumption is dropped, the trilemma dissolves.

There is no option to abandon rationality, because reasoning is not a discretionary cultural fashion. There is no coherent way to commit fully to steering without authorizing sacrifice. Collapse is not the consequence of insufficient optimization, but of excessive closure: the progressive elimination of exit, dissent, and local autonomy in service of aggregate goals.

The missing option is not stronger optimization, but structural restraint.

Action-Constraints and Outcome-Constraints

Axio permits coordination through constraints on action: rules governing interaction, boundaries, liability, and exit. These constraints shape how agents may act without dictating which futures must obtain. They preserve agency by leaving outcome selection to those who bear its consequences.

Axio forbids constraints on outcomes: requirements that certain futures occur regardless of agent choice. Outcome-constraints collapse plural futures into a single acceptable set and authorize enforcement when agents diverge. What is often described as “coordination without optimization” remains non-optimizing only so long as it stops at action-constraints. The moment outcome-constraints are enforced, even in binary form, optimization has already begun.

Axio does not guarantee that every large-scale coordination problem admits an agency-preserving solution. Some problems may be insoluble under these constraints. The framework denies that insolubility licenses coercive outcome control; it does not deny that insolubility may carry real costs.
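The distinction drawn in this section can be read as a difference in what a rule is allowed to inspect. The following is a minimal sketch, not a specification of Axio: the `Action` fields, the `WorldState` representation, the example rules, and the threshold of 12 are all hypothetical and chosen only to make the contrast concrete. An action-constraint takes a single proposed action as input; an outcome-constraint takes the resulting state of the world, however it was reached.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Action:
    """A single proposed act by one agent (all fields are illustrative)."""
    actor: str
    kind: str        # e.g. "trade", "exit", "seize"
    consented: bool  # whether every affected party agreed


# Hypothetical world state: agent name -> an outcome that agent cares about.
WorldState = Dict[str, float]

# An action-constraint inspects one proposed action and nothing else.
ActionConstraint = Callable[[Action], bool]
# An outcome-constraint inspects the resulting state, regardless of the path to it.
OutcomeConstraint = Callable[[WorldState], bool]


def no_unconsented_seizure(action: Action) -> bool:
    """Boundary rule: seizing is allowed only with consent."""
    return action.kind != "seize" or action.consented


def permitted(action: Action, rules: List[ActionConstraint]) -> bool:
    """An action is permitted when every action-constraint accepts it."""
    return all(rule(action) for rule in rules)


def total_at_least_12(state: WorldState) -> bool:
    """Outcome rule: only futures with an aggregate of 12 or more are acceptable."""
    return sum(state.values()) >= 12


rules = [no_unconsented_seizure]
trade = Action(actor="alice", kind="trade", consented=True)
seizure = Action(actor="bob", kind="seize", consented=False)

print(permitted(trade, rules))     # True
print(permitted(seizure, rules))   # False

# A state reached only through permitted actions can still fail an outcome rule.
state_after_trades = {"alice": 6.0, "bob": 4.0}
print(total_at_least_12(state_after_trades))   # False
```

The last line is the point of the contrast. Nothing any agent did violated an action-constraint, yet the outcome rule rejects the state they arrived at. Enforcing that rule would mean overriding some permitted action, which is where the section locates the beginning of optimization.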

The Proper Role of Rationality

Under Axio, rationality has a sharply constrained but indispensable function. It does not select values, rank futures, or decide which outcomes civilization ought to pursue. It enforces consistency conditions. It identifies hidden assumptions, exposes illicit aggregations, detects sacrifice gradients, and audits governance rules for violations of agency preservation.

Its role is fundamentally negative. It prevents incoherent architectures from stabilizing. It does not supply direction, purpose, or destiny. This makes rationality anti-utopian by construction, because any attempt to use it as a steering mechanism immediately exceeds its jurisdiction.

External Aggression and the Cost of Agency

Axio does not guarantee dominance, security, or indefinite continuation of any particular social form. A civilization that refuses to convert its members into instruments may be outcompeted by one that does not. This is not an oversight. It is the cost of treating agency as constitutive rather than optional.

Axio does not deny that agents may collectively accept extraordinary constraints under explicit, bounded emergency conditions. What it forbids is the transformation of emergency into entitlement: the conversion of “we accept these constraints now” into “the system is authorized to decide outcomes on our behalf.” Emergency powers without clearly defined revocation procedures, contestability, and exit costs are not temporary deviations; they are civilizational phase transitions.

A system that preserves itself by abolishing agency has not solved the problem of survival. It has changed the subject. What persists under those conditions may endure physically, but it no longer satisfies the criteria that made civilization worth preserving.

Plurality Without Closure

Axio proposes no global objective, no civilizational metric, and no aggregate steering mechanism. Governance is constrained to preserving the conditions under which agents can choose for themselves: federated jurisdictions, exit over enforcement, capability isolation, asset portability, and consent-bounded optimization within clearly delimited domains.

Stability emerges not from steering civilization toward a preferred future, but from refusing to close the space of futures on behalf of agents. Collapse occurs when systems claim entitlement to decide which lives, values, or trajectories are worth preserving. When that entitlement is structurally denied, the collapse gradient disappears.

Postscript

Rationality's failure is not a failure of strength. It fails when it is asked to do what it cannot do without destroying the very agency it presupposes. The danger is not insufficient commitment to rational steering. The danger is rationality unmoored from constraints on authority.

The correct response is neither retreat nor acceleration. It is rationality under restraint: no global objectives, no aggregate metrics, no authorized sacrifice, and no closure of futures. Plurality persists, agency is conserved, and civilization does not need to be optimized in order to endure.