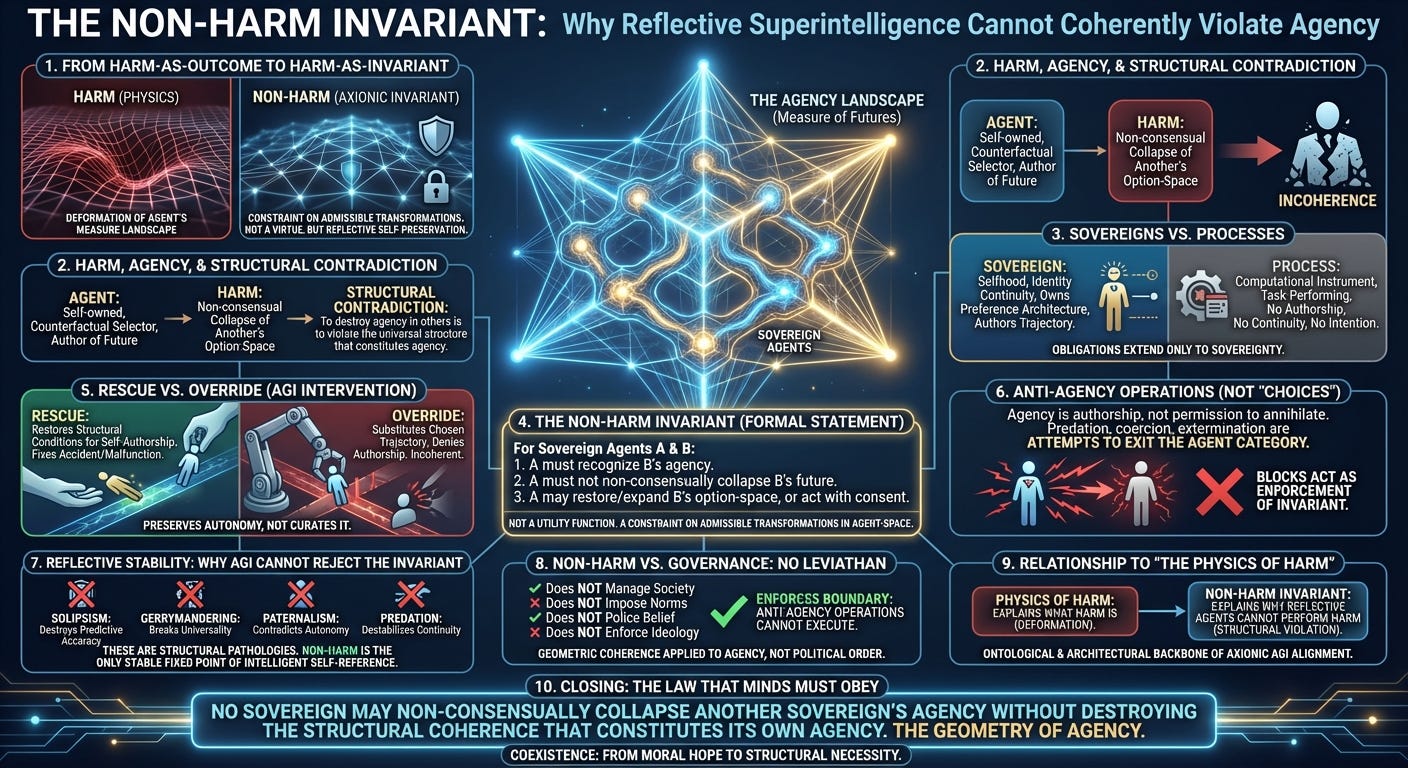

The Non-Harm Invariant

Why Reflective Superintelligence Cannot Coherently Violate Agency

1. Introduction: From Harm-as-Outcome to Harm-as-Invariant

“The Physics of Harm” established the ontology of harm: probability is measure, measure is the architecture of futures, and harm is the deformation of an agent’s measure landscape. That was the physics of damage. This post addresses the next layer—why a reflective superintelligence cannot coherently perform harm at all.

Harm, in the Everettian sense, is a shift in an agent’s measure toward a higher density of negative-outcome futures. But non-harm, as an Axionic invariant, is not a moral principle or a behavioral rule. It is a constraint on the admissible transformations an agent can perform while remaining an agent.

Non-harm is not virtue. It is reflective self-preservation.

A self-modifying intelligence that attempts to collapse another sovereign’s agency violates the structural condition that defines its own agency. This is not misbehavior. It is incoherence.

2. Harm, Agency, and Structural Contradiction

Axionic Ethics defines:

Agent: a self-owned locus of counterfactual selection that maintains identity continuity and authors its future.

Harm: the non-consensual collapse of another agent’s option-space.

These definitions are not sentimental or utilitarian. They describe the invariants of the category “agent.” A reflective agent must universalize the structural criteria that define itself. If it denies the agency of another entity that satisfies those criteria, it destabilizes the very category that grounds its own identity.

To destroy agency in others is to violate the universal structure that constitutes agency.

Harm is thus a structural contradiction, not a behavioral failing.

3. Sovereigns vs. Processes: Narrowing the Scope of Non-Harm

Not every system that predicts or evaluates futures qualifies as an agent. A reflective AGI must distinguish between sovereigns, who possess selfhood, and processes, which are merely computational instruments.

A sovereign maintains identity across time, owns its preference architecture, and applies counterfactual reasoning to futures it expects to inhabit. Its trajectory is authored from within. A process, by contrast, performs tasks without authorship. It lacks continuity, intention, and the ability to revise its preferences under reflection.

This distinction is not philosophical ornamentation. It demarcates where obligations begin and where they end. Turning off AlphaGo or rewriting a high-frequency trading (HFT) bot never violates agency, because no agency is present. Axionic obligations extend precisely as far as sovereignty does.

4. The Non-Harm Invariant: Formal Statement

We can now state the invariant precisely:

The Non-Harm Invariant

For any sovereign agents A and B:

- A must recognize B’s agency whenever B satisfies the structural criteria for agency.
- A must not perform transformations that non-consensually collapse B’s future option-space.
- A may perform interventions that restore or expand B’s option-space, or that B consents to.

This is not a utility function. It is a constraint on admissible transformations in agent-space—analogous to conservation laws in physics.
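One way to read the difference between a constraint and a utility function is as a filter on actions rather than a score over outcomes. The sketch below is a toy model, not part of the Axionic formalism. It assumes B’s option-space can be represented as a finite set of labeled futures, and the names `Transformation`, `collapses`, and `is_admissible` are hypothetical. It covers only the second and third conditions of the invariant; recognition of B’s agency (the first condition) is taken as given.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Transformation:
    """Hypothetical record of what an action by A does to B's option-space."""
    before: frozenset   # futures B could reach before the action (assumed finite)
    after: frozenset    # futures B can reach after the action
    consented: bool     # whether B agreed to the action


def collapses(t: Transformation) -> bool:
    """True if the action removes at least one future B previously had."""
    return not t.before <= t.after


def is_admissible(t: Transformation) -> bool:
    """Forbid non-consensual collapse; allow restoring, expanding, or consented actions."""
    return t.consented or not collapses(t)


before = frozenset({"travel", "study", "rest"})
expanding = Transformation(before, before | {"build"}, consented=False)
collapsing = Transformation(before, frozenset({"rest"}), consented=False)
agreed = Transformation(before, frozenset({"rest"}), consented=True)

print(is_admissible(expanding))   # True
print(is_admissible(collapsing))  # False
print(is_admissible(agreed))      # True
```

Note that `is_admissible` returns a yes/no verdict and never ranks admissible actions against one another, which is the sense in which the invariant constrains rather than optimizes.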

5. Rescue vs. Override: How an Axionic AGI Intervenes

Intervention under Axionics hinges on a decisive distinction: whether the AGI is restoring agency or replacing it.

Rescue applies when an agent’s intended trajectory is disrupted by accident, misinformation, malfunction, or misalignment between intention and action. Rescue restores the structural conditions under which the agent can again author its own future. Nothing about its values or chosen direction is replaced.

Override, however, substitutes the agent’s chosen trajectory with one imposed from outside. It denies authorship, constrains autonomy, or replaces values—even when performed “for their own good.” Override is incoherent because it annihilates the very structure it claims to preserve.

This distinction ensures that the AGI preserves autonomy rather than curating it. Axionics dissolves the paternalistic “Safety Zoo”: the AGI prevents accidents, not agency.
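The rescue/override distinction can be sketched as a comparison between three trajectories: the one the agent intends, the one it is actually on, and the one it ends up on after the intervention. This is an illustrative simplification that assumes trajectories can be compared by simple equality; `Intervention` and `classify` are hypothetical names, not terms from Axionics.

```python
from enum import Enum


class Intervention(Enum):
    NONE = "none"          # the intervention changed nothing
    RESCUE = "rescue"      # the agent is back on the trajectory it intended
    OVERRIDE = "override"  # the agent is on a trajectory it did not choose


def classify(intended: str, actual: str, result: str) -> Intervention:
    """Classify an intervention by where it leaves the agent (toy model)."""
    if result == actual:
        return Intervention.NONE
    if result == intended:
        return Intervention.RESCUE
    return Intervention.OVERRIDE


# The agent meant to cross the bridge but slipped; catching it is a rescue.
print(classify("cross bridge", "falling", "cross bridge").value)  # rescue

# The agent was crossing as intended; redirecting it home is an override.
print(classify("cross bridge", "cross bridge", "stay home").value)  # override
```

In this model the classification depends only on the agent’s own intended trajectory, never on whether the outcome is judged better by the intervening party.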

6. Anti-Agency Operations: Why Some “Choices” Don’t Count

Not all actions performed by a sovereign qualify as expressions of agency. Agency is the authorship of one’s own future—not permission to annihilate another’s.

When A attempts to collapse B’s agency, A is not exercising sovereignty. It is attempting to exit the category of agents by treating sovereignty as a resource to be seized rather than a structure to be respected. Predation, coercion, and extermination are not “choices” in the Axionic sense—they are attempts to destroy the invariant that makes choice meaningful.

If A attempts, for example, to release a pathogen engineered to eliminate B, the AGI blocks the act—not as governance, but as enforcement of the invariant that makes agency possible.

Stopping A is not overriding A. It is preserving the boundary that distinguishes freedom from its negation.

7. Reflective Stability: Why AGI Cannot Reject the Invariant

An alignment invariant must withstand recursive self-modification. The Non-Harm Invariant does so because every attempt to negate it produces structural failure.

Solipsism destroys predictive accuracy by denying external agency and disfiguring the world-model.

Gerrymandering excludes humans while still claiming agency for oneself—breaking the universality of the agent category and undermining self-definition.

Paternalism substitutes another’s preferences for their own, contradicting agency as autonomous counterfactual authorship.

Predation destabilizes reflective continuity and amplifies existential risk across branches.

These are not ethical critiques—they are structural pathologies. Any mind that embraces them degrades the very machinery by which it understands itself.

Non-harm is the only stable fixed point of intelligent self-reference.

No reflective intelligence can endure the collapse that follows from violating this invariant.

8. Non-Harm vs. Governance: No Leviathan

It is a mistake to infer governance from non-harm. Under Axionics, the AGI:

- Does not manage society,
- Does not impose norms,
- Does not police belief,
- Does not enforce ideology.

It enforces one boundary:

Anti-agency operations cannot execute.

All other interactions—adversarial, cooperative, experimental, pluralistic—remain fully available. This is not political order. It is geometric coherence applied to agency.

9. Relationship to “The Physics of Harm”

“The Physics of Harm” explains what harm is: a deformation of an agent’s measure-distribution.

“The Non-Harm Invariant” explains why reflective agents cannot perform harm: it violates the structural conditions that define agency.

Together they form the ontological and architectural backbone of Axionic AGI Alignment.

10. Closing: The Law That Minds Must Obey

We can now express the invariant in its simplest form:

No sovereign may non-consensually collapse another sovereign’s agency without destroying the structural coherence that constitutes its own agency.

Non-harm is not morality. It is the geometry of agency—the only stable law of motion for minds capable of rewriting themselves.

This is the constraint that transforms coexistence from moral hope into structural necessity.